IJNSA 01

HYPERPARAMETER TUNING-BASED OPTIMIZED PERFORMANCE ANALYSIS OF MACHINE LEARNING ALGORITHMS FOR NETWORK INTRUSION DETECTION

Sudhanshu Sekhar Tripathy and Bichitrananda Behera

Department of Computer Science and Engineering, C.V. Raman Global University, Bhubaneswar, Odisha, India

ABSTRACT

Network Intrusion Detection Systems (NIDS) are essential for securing networks by identifying and mitigating unauthorized activities indicative of cyberattacks. As cyber threats grow increasingly sophisticated, NIDS must evolve to detect both emerging threats and deviations from normal behavior. This study explores how machine learning (ML) methods can improve NIDS accuracy by learning complex patterns in feature-rich network traffic records. Using the 1999 KDD Cup intrusion dataset as a benchmark, this research evaluates and optimizes several ML algorithms, including Support Vector Machines (SVM), Naïve Bayes variants (MNB, BNB), Random Forest (RF), k-Nearest Neighbors (k-NN), Decision Trees (DT), AdaBoost, XGBoost, Logistic Regression (LR), Ridge Classifier, Passive-Aggressive (PA) Classifier, Rocchio Classifier, Artificial Neural Networks (ANN), and Perceptron (PPN). Initial evaluations without hyper-parameter optimization showed suboptimal performance, highlighting the importance of tuning for classification accuracy. After hyper-parameter optimization using grid and random search, the SVM classifier achieved 99.12% accuracy with a False Alarm Rate (FAR) of 0.0091, improving on its default configuration (98.08% accuracy, 0.0123 FAR) and achieving the highest accuracy among all evaluated classifiers. All classifiers were validated using tenfold cross-validation, with Recursive Feature Elimination (RFE) used for feature selection to improve the classifiers' accuracy and efficiency. The results indicate that ML classifiers are both adaptable and reliable, contributing to more accurate network intrusion detection.

KEYWORDS

Machine learning classification systems, Network intrusion detection mechanism, KDD CUP 1999 data repository, Hyper-parameter tuning, Performance evaluation, Classification accuracy.

1. INTRODUCTION

The rapid growth of digital technology has improved efficiency and connectivity but has also intensified sophisticated cyber threats such as ransomware, phishing, and DoS attacks. With over 90% of critical operations relying on online platforms, ensuring the confidentiality, integrity, and availability of digital assets is vital. To address these challenges, researchers are developing advanced Network Intrusion Detection Systems (NIDS) that use machine and deep learning for real-time anomaly detection and proactive defense. The integration of Artificial Intelligence (AI), especially Machine Learning (ML), has greatly enhanced NIDS. These systems analyze network traffic to distinguish normal from malicious activity, including zero-day attacks that evade traditional defenses. According to NIST, intrusion detection helps protect data confidentiality, integrity, and availability through continuous monitoring and anomaly detection [1]. Unlike signature-based systems, anomaly-based NIDS can identify previously unseen attacks by learning normal traffic behavior.

Recent advances in machine learning (ML) and deep learning (DL) have enhanced IDS performance through adaptive, data-driven detection with fewer false positives [2] [3]. Techniques such as SVM, RF, DT, KNN, XGBoost, AdaBoost, and backpropagation networks (BPN) are widely used, while CNNs and RNNs capture complex traffic patterns [4]. Hyper-parameter tuning and feature engineering improve accuracy and scalability [5] [6]. Using the KDD Cup 1999 dataset, this study evaluates multiple classifiers in terms of accuracy, precision, recall, F1-score, false alarm rate, and detection rate to strengthen IDS robustness.

Figure 1: A snapshot of a network intrusion detection system

Fig. 1 illustrates the layout of a network intrusion detection system designed to observe and assess network traffic and to identify potential intrusions or suspicious activities. The system connects to the internet through a firewall that filters traffic according to established security rules. NIDS sensors are positioned at both external and internal points to examine network packets. Traffic is routed through a switch that connects the workstations within the network. When anomalies or suspicious behavior are detected, the NIDS sends alerts to monitoring servers, which assess the threat's severity and manage response actions. This centralized setup enables continuous surveillance and strengthens the network's defense against cyberattacks through real-time threat detection and proactive response.

This research highlights the following major contributions:

- The first investigation establishes baseline performance metrics for ML classifiers applied to network intrusion detection on the 1999 KDD Cup intrusion detection dataset, without hyper-parameter tuning. A diverse range of ML algorithms was systematically assessed using tenfold cross-validation, without any feature selection. This analysis offers insight into each classifier's strengths and limitations and shows the need for further optimization to improve accuracy and lower false alarm rates. The findings serve as a benchmark for guiding future advances in network security solutions.

- The second investigation applies hyper-parameter optimization strategies, namely grid search and random search, to improve the performance of the ML classifiers. All classifiers were then evaluated using tenfold cross-validation combined with RFE, which improves accuracy and efficiency by retaining the most informative features. The results show that systematic hyper-parameter tuning leads to significant improvements, increasing detection accuracy while reducing false positives. This investigation underscores the importance of hyper-parameter optimization for the robustness and reliability of network intrusion detection systems and supports the development of more effective and efficient classifiers for practical deployment in network security applications.

Section 2 reviews recent advances in network intrusion detection, critically analyzing methodologies, emerging trends, and open challenges, and systematically comparing relevant studies. Section 3 outlines an enhanced framework for network intrusion detection using the 1999 KDD Cup intrusion detection dataset, detailing the architecture of an ML-based NIDS, its procedural components, and its optimization strategies. Section 4 presents an ML-driven design for network intrusion detection, describing the prominent ML classifiers used to improve detection efficiency and support a comprehensive performance evaluation. Section 5 describes the experimental setup and performance evaluation on the 1999 KDD Cup intrusion dataset, including confusion matrices, hyper-parameter tuning, and a comparison of results before and after optimization, emphasizing the effect of hyper-parameter optimization on accuracy and false positive rates. The final section summarizes the significant outcomes of the study and proposes future research directions for next-generation NIDS.

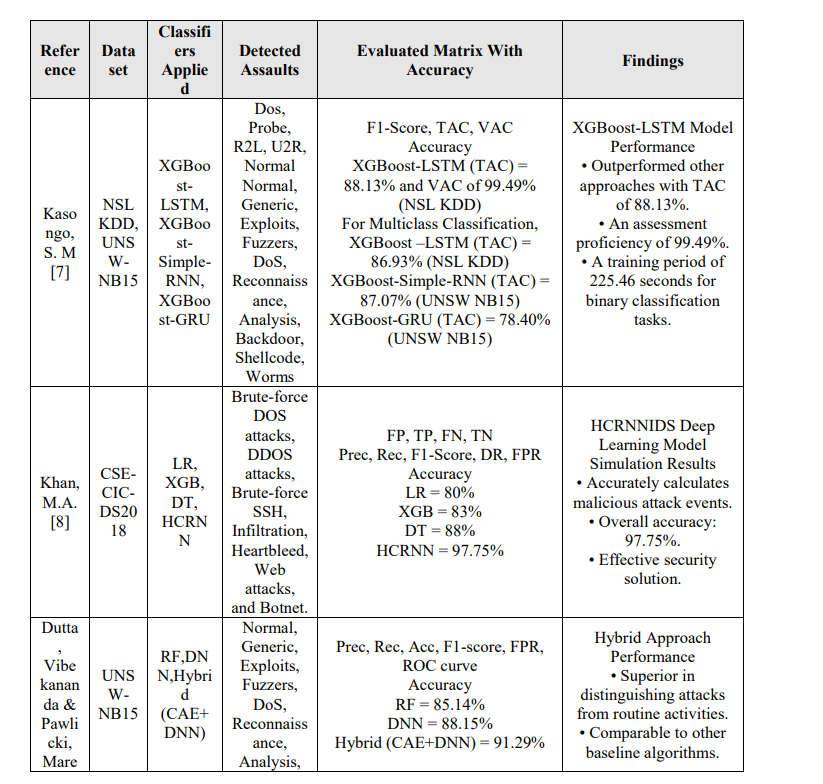

2. RELATED WORK

A study [7] employed an XGBoost-based feature selection approach, identifying 17 and 22 optimal features for the NSL-KDD and UNSW-NB15 datasets, respectively. The XGBoost-LSTM hybrid achieved 99.49% validation accuracy and 88.13% test accuracy on NSL-KDD, while XGBoost-Simple-RNN attained 87.07% on UNSW-NB15. Another study [8] introduced HCRNNIDS, a hybrid CRNN integrated with logistic regression, decision trees, and XGBoost, achieving 97.75% accuracy on CSE-CIC-IDS2018 and outperforming several traditional and deep learning IDS models. In [9], a hybrid anomaly detection model integrating a classical autoencoder (CAE) with a deep neural network (DNN) was applied to the UNSW-NB15 dataset. The CAE enhanced DNN performance through sparse feature extraction, achieving 91.29% accuracy and outperforming baseline models. Similarly, [10] proposed a deep learning-based intrusion detection and prevention system (IDPS) using an MLP trained on KDD CUP 1999 and optimized with Adam, achieving 91.4% accuracy compared with DT (74%) and SVM (83%) classifiers.

In [11], a hybrid IoT intrusion detection model combined random forest-based feature selection with neural classifiers (B-ANN and DR-NN), achieving 98% accuracy on KDD CUP 1999 and demonstrating strong adaptability across intelligent networks. Similarly, [12] evaluated NB, DT, KNN, RF, SVM, MLP, and LSTM on NSL-KDD, reporting accuracies of 89.6% (with scaling), 89.2% (without scaling), 96.89% (MLP), and 97.77% (LSTM), confirming LSTM's strength in modeling temporal dependencies. The study in [13] highlighted CNNs as highly effective for IoT intrusion detection, demonstrating deep learning's advantage over traditional methods. In [14], DNN and LSTM models on NSL-KDD showed that a three-layer LSTM with 32 neurons per layer achieved 98.3% accuracy, outperforming enlarged DNNs and conventional models. Reference [15] applied a deep autoencoder for five-class intrusion detection on NSL-KDD, achieving 99% training and 91.28% testing accuracy. In [16], an LSTM-based IDS on CIDDS reached 85% accuracy, surpassing SVM, MLP, and Naïve Bayes.

A CNN-IDS in [17] applied dimensionality reduction to KDD 1999 data, converting traffic into image-like representations to reduce complexity, and achieved higher accuracy and a lower FAR than conventional methods. The study in [18] proposed a deep belief network (DBN) framework optimized with PSO, clustering, and genetic operators, reducing detection time by 24.69% and improving five-class accuracy by 1.3–14.8%. An improved LeNet-5 CNN in [19] integrated normalization and one-hot encoding, achieving over 99% training and evaluation accuracy with a FAR below 0.1%. DL-IDS in [20] combined CNN and LSTM for feature extraction, with category weight optimization to handle class imbalance; on CICIDS2017, multi-class accuracy reached 98.67%, exceeding 99.5% for certain attack classes, showing its effectiveness for diverse intrusion patterns. In [21], a DBN-ELM hybrid applied feature extraction and classification on NSL-KDD, using majority voting to refine predictions, and achieved 97.82% accuracy and a 1.81% false alarm rate, outperforming the individual DBN and ELM models. The deep multilayer framework in [22], incorporating feedback, autoencoding, preprocessing, database management, and classification, attained 96.70% accuracy on NSL-KDD, highlighting the advantage of integrated architectures. In [23], a stacked nonsymmetric deep autoencoder (NDAE) enhanced unsupervised feature extraction on KDD 1999 and NSL-KDD, significantly improving detection performance over traditional NIDS.

3. AN OPTIMAL APPROACH TO NETWORK INTRUSION DETECTION USING THE 1999 KDD CUP DATASET

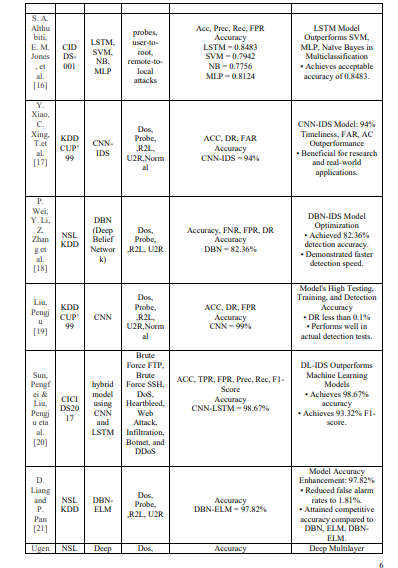

Fig. 2: ML-based NIDS architecture

Fig. 2 depicts the optimal workflow for NIDS using the 1999 KDD Cup intrusion dataset, which involves several well-defined steps to support accurate and timely detection and classification of cyber intrusions targeting network infrastructure:

Dataset Utilization: The 1999 KDD Cup intrusion dataset serves as the input to the NIDS architecture. It is widely used in network intrusion detection research and provides a diverse set of network connection records, each labeled as normal activity or as a specific cyberattack category.

Data Pre-Processing: The raw data is pre-processed to improve its quality. This step includes handling missing values, eliminating redundant records, and normalizing or scaling features. Pre-processing ensures that the dataset is clean and ready for training and evaluation.
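The pre-processing steps can be sketched with pandas and scikit-learn as below. This is a minimal illustration, not the authors' code: it assumes the data is already in a DataFrame with integer column labels 0 to 41 (as produced by reading the CSV with header=None), that columns 1, 2, and 3 hold the categorical protocol_type, service, and flag attributes, and that column 41 holds the label. One-hot encoding and min-max scaling are assumed choices, and the three rows are synthetic.

```python
import pandas as pd
from sklearn.preprocessing import MinMaxScaler

CATEGORICAL_COLS = [1, 2, 3]  # protocol_type, service, flag (KDD Cup 1999 layout)
LABEL_COL = 41                # last column holds the connection label

def preprocess(df: pd.DataFrame):
    """Drop duplicate/missing rows, one-hot encode categoricals, scale to [0, 1]."""
    df = df.drop_duplicates().dropna()
    y = df[LABEL_COL].to_numpy()
    X = pd.get_dummies(df.drop(columns=[LABEL_COL]), columns=CATEGORICAL_COLS)
    X.columns = X.columns.astype(str)  # scikit-learn needs uniform column-name types
    X_scaled = MinMaxScaler().fit_transform(X.astype(float))
    return X_scaled, y, list(X.columns)

# Three synthetic rows in the 42-column layout; the third duplicates the first.
rows = [
    [0, "tcp", "http", "SF"] + [100] * 37 + ["normal."],
    [2, "udp", "private", "SF"] + [5] * 37 + ["neptune."],
    [0, "tcp", "http", "SF"] + [100] * 37 + ["normal."],
]
raw = pd.DataFrame(rows)
X, y, feature_names = preprocess(raw)
print(X.shape, list(y))
```

After deduplication two rows remain, and the three categorical columns expand into five indicator columns, giving 43 scaled features.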

Feature Selection: Before model training, Recursive Feature Elimination (RFE) was utilized to optimize the feature space, ensuring that only the most relevant attributes were used in classification. By iteratively eliminating less significant features, RFE streamlines the dataset, which leads to faster processing times and improved model accuracy. This targeted approach allows classifiers to focus on essential indicators of network intrusions, thereby strengthening their detection capabilities.
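A minimal scikit-learn sketch of RFE follows. It assumes a decision tree as the estimator that ranks features and an arbitrary target of 8 retained features; the paper does not state which base estimator or feature count was used, and the data comes from make_classification rather than KDD records.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for preprocessed features: 20 features, 5 informative.
X, y = make_classification(n_samples=500, n_features=20, n_informative=5,
                           n_redundant=0, random_state=0)

# RFE fits the estimator, removes the `step` least important features
# (ranked by feature_importances_ for a tree), and repeats until
# n_features_to_select remain.
selector = RFE(estimator=DecisionTreeClassifier(random_state=0),
               n_features_to_select=8, step=1)
selector.fit(X, y)

selected = [i for i, keep in enumerate(selector.support_) if keep]
X_reduced = selector.transform(X)
print("kept feature indices:", selected)
print("ranking (1 = kept):", selector.ranking_)
```

Features eliminated earlier receive larger ranks, so `ranking_` also records the order in which features were discarded.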

Data Splitting: The dataset is divided into separate segments for training and evaluating the classifier. The training set is used to build and fine-tune the ML classifiers, while the testing set assesses how well the trained model performs and generalizes to unseen data.
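The split can be sketched with scikit-learn's `train_test_split`, using the 70/30 ratio reported in the experimental section. Passing `stratify=y` is an assumption on our part, chosen so that each class keeps roughly the same proportion in both subsets; the imbalanced three-class data is synthetic.

```python
from collections import Counter
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split

# Imbalanced synthetic data standing in for the KDD classes.
X, y = make_classification(n_samples=1000, n_classes=3, n_informative=4,
                           weights=[0.7, 0.2, 0.1], random_state=42)

# 70% training / 30% testing; stratify=y preserves class proportions.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, stratify=y, random_state=42)

print(len(X_train), len(X_test))
print(Counter(y_train), Counter(y_test))
```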

Classifier Training with ML Algorithms: Multiple ML algorithms are trained on the dataset to uncover patterns and correlations in the network traffic. This allows the models to accurately classify traffic as either normal or belonging to specific types of attacks.

Hyper-parameter Optimization: Hyper-parameters are optimized to fine-tune each classifier's performance. This involves adjusting parameters such as the learning rate, the number of estimators in ensemble methods, or the depth of decision trees to achieve the best possible results.

Trained Classifier: Using the optimal hyper-parameters, the classifier is trained on the training data. This yields a classifier that can predict the category of new, unseen instances based on learned patterns.

Multi-Class Prediction: The trained classifier generates predictions for each instance, assigning them to one of the following categories:

0: Normal Activity

1: Denial-of-Service (DoS) Attack

2: Probing/Scanning Attempt

3: Remote-to-Local (R2L) Intrusion

4: User-to-Root (U2R) Privilege Escalation

Decision Block (Normal or Attack): A decision block checks whether the prediction corresponds to the “Normal” class (prediction = 0). If the prediction equals 0, the instance is classified as normal; otherwise, the prediction matches one of the attack classes and the instance is categorized as an attack.

Attack Classification: For instances categorized as attacks, the system further classifies them into specific attack types such as DoS, probe, R2L, or U2R. This fine-grained classification enables precise identification and differentiation of attack types within the broader category of malicious activities.
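The label encoding and decision block can be sketched as follows. The mapping of raw KDD attack names to the four attack categories is the grouping commonly used for the 22 attack types in the KDD Cup 1999 training data; the paper does not list it, so treat the dictionary as an assumption. Attack names that appear only in the test data are not covered and would raise a `KeyError`.

```python
# Commonly used grouping of KDD Cup 1999 training-set attack names into the
# four attack categories (assumed; not listed in the paper).
ATTACK_CATEGORY = {
    **dict.fromkeys(["back", "land", "neptune", "pod", "smurf", "teardrop"], 1),  # DoS
    **dict.fromkeys(["ipsweep", "nmap", "portsweep", "satan"], 2),                 # Probe
    **dict.fromkeys(["ftp_write", "guess_passwd", "imap", "multihop", "phf",
                     "spy", "warezclient", "warezmaster"], 3),                     # R2L
    **dict.fromkeys(["buffer_overflow", "loadmodule", "perl", "rootkit"], 4),      # U2R
}
CLASS_NAMES = {0: "Normal", 1: "DoS", 2: "Probe", 3: "R2L", 4: "U2R"}

def encode_label(raw: str) -> int:
    """Map a raw label such as 'smurf.' to its class index (0 = normal)."""
    name = raw.strip().rstrip(".")
    return 0 if name == "normal" else ATTACK_CATEGORY[name]

def decide(prediction: int) -> str:
    """Decision block: class 0 is normal; any other class is an attack of that type."""
    if prediction == 0:
        return "Normal"
    return f"Attack ({CLASS_NAMES[prediction]})"

print([encode_label(s) for s in ["normal.", "smurf.", "satan.", "guess_passwd.", "rootkit."]])
print([decide(p) for p in [0, 1, 4]])
```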

4. NETWORK INTRUSION DETECTION SYSTEMS WITH ML CLASSIFIERS

4.1. Classifiers and Techniques in Machine Learning

Machine learning enhances NIDS by enabling autonomous intrusion detection through data-driven pattern recognition [24], [25]. Supervised learning offers high accuracy using labeled data [26], while unsupervised learning detects anomalies without labels, typically with lower accuracy [27]. Both approaches improve NIDS performance, strengthen security, and reduce false positives [28].

4.2. Classification Approach Using Support Vector Machines

SVMs are widely used in NIDS for their high accuracy in detecting and classifying network anomalies. They classify data by finding a maximum-margin separator between normal and malicious traffic, relying on support vectors for efficiency even with limited training data. For non-linear patterns, kernel methods map inputs to higher-dimensional spaces, enabling complex decision boundaries [29]. This approach minimizes classification errors and false positives, making SVMs robust and versatile for both linear and non-linear intrusion detection scenarios.
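The role of the kernel can be illustrated on a toy problem. The sketch below uses scikit-learn's `SVC` on two concentric rings (synthetic data from `make_circles`, not network traffic), where no straight line separates the classes: a linear kernel performs poorly, while an RBF kernel, which implicitly maps inputs to a higher-dimensional space, separates them almost perfectly.

```python
from sklearn.datasets import make_circles
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Two concentric rings: not linearly separable in the original 2-D space.
X, y = make_circles(n_samples=400, noise=0.05, factor=0.5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

linear_svm = SVC(kernel="linear", C=1.0).fit(X_train, y_train)
rbf_svm = SVC(kernel="rbf", C=1.0, gamma="scale").fit(X_train, y_train)

linear_acc = linear_svm.score(X_test, y_test)
rbf_acc = rbf_svm.score(X_test, y_test)
print(f"linear kernel: {linear_acc:.2f}, RBF kernel: {rbf_acc:.2f}")
# Only the support vectors define the maximum-margin boundary.
print("support vectors per class (RBF):", rbf_svm.n_support_)
```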

4.3. Probabilistic Learning Classifier Using Naïve Bayes

The Naive Bayes classifier, based on Bayes’ theorem, predicts class probabilities by assuming conditional independence among features. Variants such as Multinomial Naive Bayes (MNB) handle count data, while Bernoulli Naive Bayes (BNB) processes binary features. In NIDS, it is valued for simplicity, scalability, and computational efficiency, enabling effective analysis of high-dimensional network data. Despite the strong independence assumption, Naive Bayes reliably differentiates normal from malicious connections, providing a lightweight intrusion detection solution [30].

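Bayes' theorem gives the posterior probability of a class given the observed features:

$$P(S \mid T) = \frac{P(T \mid S)\,P(S)}{P(T)} \tag{1}$$

Where: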

T: Observed features or data.

S: Target class or category.

P(S|T): Probability of class S given data T.

P(S): Prior probability of class S.

P(T|S): Probability of data T given class S.

P(T): Overall probability of data T.
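A small worked example of Bayes' theorem helps make these terms concrete. All probabilities below are hypothetical and chosen only for illustration: 20% of connections are attacks, and a feature pattern T appears in 90% of attacks and 5% of normal connections. The second call shows the naive independence assumption, under which the likelihood of two features is the product of their individual likelihoods.

```python
def posterior(prior_s: float, likelihood_t_given_s: float,
              likelihood_t_given_not_s: float) -> float:
    """Two-class Bayes' theorem: P(S|T) = P(T|S) P(S) / P(T)."""
    # P(T) by the law of total probability over S and not-S.
    evidence = likelihood_t_given_s * prior_s + likelihood_t_given_not_s * (1 - prior_s)
    return likelihood_t_given_s * prior_s / evidence

# Hypothetical values: P(attack) = 0.2, P(T|attack) = 0.9, P(T|normal) = 0.05.
p = posterior(prior_s=0.2, likelihood_t_given_s=0.9, likelihood_t_given_not_s=0.05)
print(round(p, 3))  # 0.818

# Naive assumption with a second hypothetical feature:
# P(t1, t2 | S) = P(t1 | S) * P(t2 | S).
p_two = posterior(prior_s=0.2, likelihood_t_given_s=0.9 * 0.7,
                  likelihood_t_given_not_s=0.05 * 0.3)
print(round(p_two, 3))  # 0.913
```

The extra evidence raises the attack posterior from about 0.82 to about 0.91.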

4.4. Classification Technique Using a Decision Tree

Decision trees (DTs) are a popular supervised learning method for classification and regression, structured as hierarchical trees with internal nodes for feature-based decisions, branches for outcomes, and leaves for predictions. In NIDS, DTs effectively detect normal and malicious traffic using features such as connection duration, protocol, and service type. Their interpretability and feature-driven decision process allow efficient handling of complex datasets, providing accurate and real-time intrusion detection with computational efficiency in large-scale networks [31].

4.5. K-Nearest Neighbor based Classification Technique

K-Nearest Neighbors (KNN) is a non-parametric, distance-based, instance-based learning method widely used in NIDS for its simplicity and effectiveness. It classifies a data point based on the majority label among its K nearest neighbors, using metrics such as Euclidean distance. By comparing network connections with labeled training instances, KNN identifies normal and malicious patterns. Although computationally intensive for large datasets, techniques like dimensionality reduction and approximate neighbor search enhance its scalability and efficiency [32].
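The majority-vote rule can be written in a few lines of NumPy. This is an illustrative from-scratch sketch on hypothetical two-dimensional feature vectors, checked against scikit-learn's `KNeighborsClassifier`, which uses Euclidean distance by default.

```python
from collections import Counter

import numpy as np
from sklearn.neighbors import KNeighborsClassifier

def knn_predict(X_train, y_train, x, k=3):
    """Label x by majority vote among its k nearest training points (Euclidean)."""
    distances = np.linalg.norm(X_train - x, axis=1)
    nearest = np.argsort(distances)[:k]
    votes = Counter(y_train[nearest])
    return votes.most_common(1)[0][0]

# Toy feature vectors: label 0 = normal, 1 = attack (hypothetical values).
X_train = np.array([[0.1, 0.2], [0.2, 0.1], [0.15, 0.25],
                    [0.9, 0.8], [0.8, 0.9], [0.85, 0.95]])
y_train = np.array([0, 0, 0, 1, 1, 1])

print(knn_predict(X_train, y_train, np.array([0.2, 0.2])))   # 0
print(knn_predict(X_train, y_train, np.array([0.75, 0.8])))  # 1

ref = KNeighborsClassifier(n_neighbors=3).fit(X_train, y_train)
print(ref.predict([[0.2, 0.2], [0.75, 0.8]]))  # [0 1]
```

Every prediction scans all training points, which is why the text notes the cost on large datasets.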

4.6. Classification Approach Using Logistic Regression

Logistic Regression (LR) is a supervised algorithm used in intrusion detection to classify network traffic as normal or malicious. It applies the logistic function to generate outputs between 0 and 1, estimating the probability of each class and making predictions based on a threshold. LR is efficient, interpretable, and computationally lightweight, providing probabilistic predictions. However, its simplicity may limit performance on complex, high-dimensional data, where more advanced models often perform better [33].

In logistic regression, a linear model is derived from the provided attributes and processed through a sigmoid curve, resulting in a probabilistic output. The sigmoid function is mathematically expressed as:

$$F(x) = \frac{1}{1 + e^{-x}} \tag{2}$$

In this equation, F(x) yields a probability between 0 and 1, with “e” standing for the natural exponential base, and “x” acting as the function’s input.
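To show how the sigmoid turns a linear score into a class decision, here is a minimal sketch. The weights, bias, and feature values are hypothetical; in practice they would be learned from training data, for example by scikit-learn's `LogisticRegression`.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic function F(x) = 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))

def predict_attack(features, weights, bias, threshold=0.5):
    """Linear score z = w . x + b, mapped to a probability, then thresholded."""
    z = sum(w * f for w, f in zip(weights, features)) + bias
    p = sigmoid(z)
    return p, int(p >= threshold)

print(sigmoid(0.0))  # 0.5
# Hypothetical model: z = 2.0*1.0 - 1.0*0.5 - 0.5 = 1.0 -> p ≈ 0.731, label 1
print(predict_attack([1.0, 0.5], [2.0, -1.0], -0.5))
```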

4.7. Classifier Using Linear Discriminant Analysis Technique

Linear Discriminant Analysis (LDA) is a supervised method used in intrusion detection to classify network traffic and reduce feature dimensionality. It maximizes differences between classes while minimizing within-class variance, identifying linear combinations of features that enhance separability. LDA effectively classifies traffic into normal or specific attack types, supports multi-class detection, and improves computational efficiency by preserving class separability in lower-dimensional space [34].

4.8. Optimized Extreme Gradient Boosting (XGBOOST) Classifier

XGBoost is a scalable gradient boosting algorithm widely used in network-level intrusion detection for its efficiency with large and complex datasets. It combines multiple weak learners, typically decision trees, to iteratively improve predictive performance by correcting previous errors. This approach effectively handles high-dimensional and imbalanced data, enabling accurate detection of diverse and novel intrusion types, making XGBoost a robust solution for precise NIDS implementation [35].

4.9. AdaBoost Classifier

AdaBoost is a boosting algorithm commonly used in network intrusion detection for its ability to improve accuracy by combining weak learners into a strong classifier. It assigns higher weights to misclassified instances, ensuring that subsequent models focus on difficult or ambiguous patterns. This adaptive approach reduces false positives and effectively handles high-dimensional, imbalanced network data, enhancing detection of normal and malicious activities, including emerging or unknown threats.
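The reweighting step can be seen in one round of the textbook binary (discrete) AdaBoost, sketched below with labels in {-1, +1} and hypothetical weak-learner predictions. Library implementations differ in detail; scikit-learn's multi-class SAMME variant, for instance, uses a different learner weight.

```python
import numpy as np

def adaboost_reweight(weights, y_true, y_pred):
    """One round of discrete AdaBoost: compute the learner weight alpha and
    up-weight misclassified samples (labels in {-1, +1})."""
    miss = y_true != y_pred
    err = weights[miss].sum() / weights.sum()
    alpha = 0.5 * np.log((1 - err) / err)
    # Correct samples are scaled by e^{-alpha}, misclassified ones by e^{+alpha}.
    new_w = weights * np.exp(-alpha * y_true * y_pred)
    return alpha, new_w / new_w.sum()

w0 = np.full(8, 1 / 8)                            # uniform starting weights
y_true = np.array([1, 1, 1, -1, -1, -1, 1, -1])
y_pred = np.array([1, 1, -1, -1, -1, 1, 1, -1])   # hypothetical weak learner: 2 errors
alpha, w1 = adaboost_reweight(w0, y_true, y_pred)
print(round(alpha, 3), w1.round(3))
```

With a 25% weighted error, the two misclassified samples go from 1/8 to 1/4 of the total weight each. After normalization they hold half of the total weight, so the next weak learner must focus on them.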

4.10. Random Forest Classifier

Random Forest (RF) is an ensemble learning method widely used in network-layer intrusion detection for its accuracy and robustness against overfitting. It constructs multiple decision trees on varied data subsets and aggregates their predictions, capturing complex, non-linear patterns in high-dimensional NIDS datasets. RF effectively detects both known and zero-day threats, handles imbalanced data, and ranks critical features to enhance accuracy while reducing computational demands [36].

4.11. Artificial Neural Network (ANN)

Artificial Neural Networks (ANNs) are widely used in network intrusion detection for their ability to model complex, non-linear data. They comprise an input layer for network features, hidden layers for feature extraction, and an output layer for classification. Neurons are interconnected with weighted links, and activation functions such as ReLU, Sigmoid, Tanh, and Softmax process inputs. Methods like Perceptron, SGD, and backpropagation optimize the network by minimizing errors. Deep ANN architectures improve detection accuracy, enhance system performance, and reduce false alarms [37].

4.12. Ridge Classifier

The Ridge classifier assumes that data points of each class lie within a linear subspace, enabling continuous analysis for classification [38]. In NIDS, it addresses multicollinearity among network features through L2 regularization, stabilizing predictions and reducing variance. By controlling model complexity, Ridge regression minimizes overfitting and ensures accurate, reliable detection of network anomalies, making it suitable for high-dimensional intrusion detection tasks.
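The effect of L2 regularization is easiest to see in closed form. The sketch below assumes a binary problem and disables the intercept for simplicity. In this setting, scikit-learn's `RidgeClassifier` encodes the labels as -1/+1 and solves ridge regression, and the predicted class is the sign of the linear score. The data is synthetic.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import RidgeClassifier

X, y = make_classification(n_samples=200, n_features=6, n_informative=4,
                           n_redundant=0, random_state=0)
alpha = 1.0  # L2 penalty strength

# Ridge solution with labels mapped to -1/+1 (no intercept):
# w = (X^T X + alpha I)^(-1) X^T y_pm; alpha I keeps the system well conditioned.
y_pm = np.where(y == 1, 1.0, -1.0)
w_closed = np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ y_pm)

clf = RidgeClassifier(alpha=alpha, fit_intercept=False).fit(X, y)
print(np.allclose(w_closed, clf.coef_.ravel(), atol=1e-6))

# The predicted class is the sign of the linear score X w.
pred = np.where(X @ w_closed > 0, 1, 0)
print((pred == clf.predict(X)).all())
```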

4.13. Passive Aggressive (PA) Classifier

Passive-Aggressive (PA) classifiers are scalable online learning algorithms that update models incrementally as new data arrives, unlike traditional batch methods. In NIDS, they adapt to evolving network conditions by processing streaming data efficiently. Using a regularization parameter (C) instead of a learning rate, PA classifiers penalize misclassifications to balance accuracy and model simplicity. This enables real-time anomaly detection with low computational overhead, making them well-suited for high-traffic networks [39].
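The passive/aggressive behaviour and the role of C can be shown with a single PA-I update for a binary label y in {-1, +1}. To our understanding, PA-I corresponds to the hinge-loss setting of scikit-learn's `PassiveAggressiveClassifier`; the sketch below is a from-scratch illustration on a hypothetical feature vector, not that library's implementation.

```python
import numpy as np

def pa1_update(w, x, y, C=1.0):
    """One Passive-Aggressive (PA-I) step for a label y in {-1, +1}.
    Passive if the hinge loss is zero; otherwise move w just enough to reach
    a margin of 1, with the step size capped by C."""
    loss = max(0.0, 1.0 - y * np.dot(w, x))
    if loss == 0.0:
        return w                          # passive: already correct with margin >= 1
    tau = min(C, loss / np.dot(x, x))     # aggressive step, bounded by C
    return w + tau * y * x

x = np.array([1.0, 2.0, 0.0])             # hypothetical feature vector

w = pa1_update(np.zeros(3), x, y=+1, C=10.0)
print(w, np.dot(w, x))                    # margin reaches 1, so the hinge loss is 0

w_capped = pa1_update(np.zeros(3), x, y=+1, C=0.1)
print(w_capped, np.dot(w_capped, x))      # step capped by C: margin only 0.5
```

A small C produces cautious updates that resist noisy or mislabeled samples. A large C lets each new example fully correct the model.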

4.14. Rocchio (RC) Classifier

The Rocchio algorithm, originating from relevance feedback in information retrieval, is applied in NIDS for classification. During training, it computes a centroid for each class as a prototype. In testing, class labels are assigned based on the Euclidean distance between incoming data points and centroids. This proximity-based method efficiently detects anomalies, distinguishing normal traffic from potential intrusions while helping minimize false positives.
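The centroid-based training and testing steps can be sketched in NumPy and compared with scikit-learn's `NearestCentroid`, which implements the same nearest-prototype rule with Euclidean distance by default. The two Gaussian clusters are synthetic stand-ins for normal and attack traffic.

```python
import numpy as np
from sklearn.neighbors import NearestCentroid

def fit_centroids(X, y):
    """Training: one prototype (mean vector) per class."""
    classes = np.unique(y)
    return classes, np.array([X[y == c].mean(axis=0) for c in classes])

def predict_centroids(classes, centroids, X):
    """Testing: assign each point to the class whose centroid is nearest (Euclidean)."""
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return classes[dists.argmin(axis=1)]

rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 0.3, (50, 4)), rng.normal(2.0, 0.3, (50, 4))])
y = np.array([0] * 50 + [1] * 50)   # 0 = normal, 1 = attack (synthetic)

classes, centroids = fit_centroids(X, y)
ours = predict_centroids(classes, centroids, X)
ref = NearestCentroid().fit(X, y).predict(X)
print((ours == ref).all(), (ours == y).mean())
```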

5. RESULTS AND DISCUSSION

5.1. Experimental Setup

Machine learning computations were performed using Python’s Scikit-learn library. Experiments were conducted on Google Colaboratory, a cloud-based platform equipped with a Tesla K20 GPU (2,496 CUDA cores, 16 GB RAM, and 500 GB storage), as well as locally on a Windows 11 system powered by an Intel Core i5-1240P processor (4.40 GHz, 12th generation), identified as DESKTOP-UFN62J4. This dual setup facilitated a comprehensive evaluation of machine learning classifiers in both cloud and local computing environments.

5.2. Dataset Description

NIDS monitor network activity to identify abnormal patterns indicative of security threats while allowing normal traffic. Machine learning classifiers trained on datasets containing both normal and attack patterns improve detection by recognizing diverse network behaviors. In this study, the 1999 KDD Cup dataset was split into 70% for training and 30% for testing, preserving the class distribution in both subsets. Tenfold cross-validation was also applied to rigorously evaluate model accuracy, generalization, and robustness against overfitting.

5.3. The 1999 KDD CUP Intrusion Dataset

The 1999 KDD Cup intrusion dataset is a widely used benchmark for evaluating network intrusion detection mechanisms. Developed for the KDD 1999 data mining challenge, it contains simulated network traffic with both normal and malicious connections. The dataset includes approximately 4.9 million records, each described by 41 features capturing key aspects of network behavior, such as connection duration, protocol type, and error rate, making it a foundational resource for anomaly detection and machine learning in cybersecurity. The 1999 KDD Cup dataset categorizes malicious activity into four types: Denial of Service (DoS), Remote-to-Local (R2L), User-to-Root (U2R), and Probe attacks. Normal network traffic is also included to provide a baseline for training and evaluation. The dataset is typically split into training and testing subsets, with cross-validation used to assess detection performance. Despite criticisms such as data redundancy, it remains a widely accepted benchmark for developing and evaluating NIDS.

(i) Denial-of-Service (DoS) Assaults: These types of exploits focus on disrupting network infrastructure or system operations by inundating them with excessive traffic or requests, such as in a SYN Flood attack.

(ii) Remote-to-Local (R2L) Intrusion: These occur when a malicious agent gains access to a local computer remotely without valid credentials, often through techniques like password cracking.

(iii) Probing/Scanning Attempt: These involve reconnaissance activities aimed at collecting information about a network’s structure and identifying vulnerabilities, such as through port scanning.

(iv) User-to-Root (U2R) Privilege Escalation: In these attacks, an intruder tries to elevate privileges from a regular user account to administrator (root) access, often using methods such as buffer overflow exploits.

Fig. 3: Feature and label structure of the 1999 KDD Cup intrusion detection dataset

Fig. 3 illustrates loading and displaying the 1999 KDD Cup dataset with Python's pandas library. The CSV files kddtrain.csv and kddtest.csv are imported into the DataFrames traindata and testdata, with header=None indicating that the files have no header row. The command traindata.head(8) displays the first eight rows, showing 42 columns indexed from 0 to 41. Each row represents a network connection, and each column corresponds to an attribute such as protocol type, connection duration, or connection status, with the final column (41) holding the class label.
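A runnable version of the loading step is sketched below. To keep it self-contained, it reads two synthetic lines in the 42-column KDD format from an in-memory buffer. To load the actual files named in the text, the buffer would be replaced by the path `"kddtrain.csv"` (or `"kddtest.csv"`).

```python
import io

import pandas as pd

# Two synthetic lines in the 42-column KDD Cup 1999 format (41 features + label).
row1 = "0,tcp,http,SF,181,5450" + ",0" * 35 + ",normal."
row2 = "0,icmp,ecr_i,SF,1032,0" + ",0" * 35 + ",smurf."
sample_csv = io.StringIO(row1 + "\n" + row2 + "\n")

# header=None: the file has no header row, so columns are labeled 0..41.
traindata = pd.read_csv(sample_csv, header=None)

print(traindata.shape)               # (2, 42)
print(traindata.head(8))             # first (up to) eight rows
print(traindata[41].value_counts())  # class labels in the last column
```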

The 1999 KDD Cup dataset is widely used for detecting malicious network activity and includes four types of columns: basic features, content features, traffic features, and class labels, as summarized in Table 2. Basic features describe connection properties, such as duration, protocol type (TCP, UDP, ICMP), service (HTTP, FTP), flags, and data transfer volumes (src_bytes, dst_bytes). Content features capture activity within a connection, including failed logins, user login status, system-level actions such as root_shell or su_attempted, the number of shells and accessed files, and login types (host_login, guest_login). Traffic features aggregate statistics over recent connections, such as the number of connections to the same host or service (count, srv_count) and the corresponding error rates. Class labels distinguish normal traffic from attacks, including DoS, R2L, U2R, and Probe. These features are critical for training machine learning classifiers for effective intrusion detection.

Table 2: Frequency distribution of instances across attack classes in the 1999 KDD Cup intrusion dataset

5.4. Performance Evaluation Metrics of NIDS

Evaluating network monitoring and intrusion detection systems is crucial for enhancing threat detection, refining algorithms, reducing false positives, and ensuring operational reliability. Performance is measured using metrics such as Accuracy (Acc), Precision (Prec), Recall (Rec), F1-score, False Alarm Rate (FAR), and Detection Rate (DR). Table 3 summarizes four key outcomes: true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN), which form the basis for performance assessment. A confusion matrix organizes these outcomes, allowing computation of the key metrics and providing a structured framework for evaluating machine learning classifiers in intrusion detection.

True Positive (TP): An intrusion attempt is correctly recognized by the system as malicious, confirming successful threat detection.

False Positive (FP): Benign traffic is incorrectly flagged as a threat, triggering an unnecessary alert.

True Negative (TN): Safe network activity is accurately classified as non-malicious, resulting in no false warning.

False Negative (FN): A harmful activity passes through undetected and is wrongly classified as legitimate, signifying a lapse in the detection mechanism.
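Table 3's formulas appear only as an image, so the sketch below uses the standard definitions, with "attack" as the positive class. It assumes that DR equals recall, TP/(TP + FN), and that FAR equals the false positive rate, FP/(FP + TN). These conventions are common, but the paper's table may differ in detail. The counts are hypothetical.

```python
def nids_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Standard binary metrics from confusion-matrix counts (attack = positive)."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)               # also reported as detection rate (DR)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "far": fp / (fp + tn),            # false alarm rate = false positive rate
        "dr": recall,
    }

m = nids_metrics(tp=90, fp=5, tn=95, fn=10)   # hypothetical counts
print({k: round(v, 4) for k, v in m.items()})
```

The function does not guard against zero denominators, for example when no attacks are predicted.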

Table 3: Calculating NIDS performance metrics

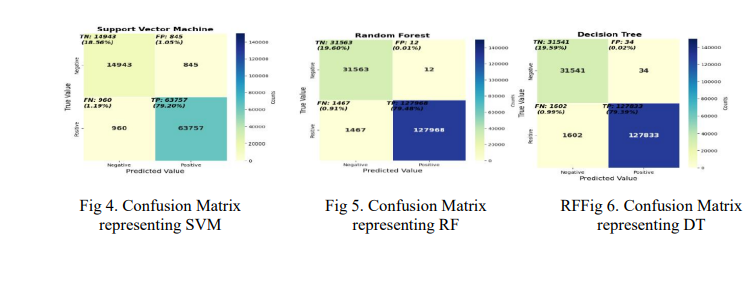

5.5. Confusion Matrices for ML Classifiers on the 1999 KDD Cup Intrusion Dataset

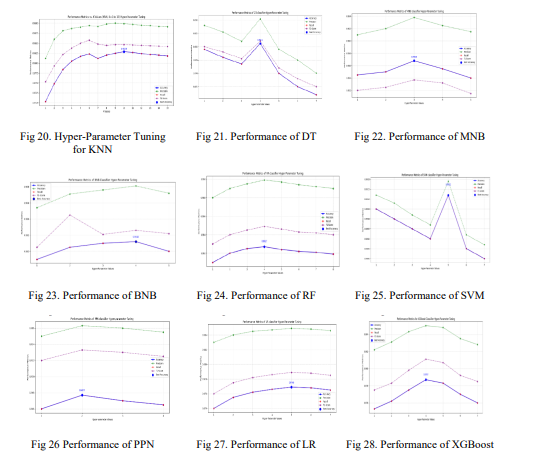
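Per-class TP, FP, TN, and FN counts can be derived from a five-class confusion matrix (Normal, DoS, Probe, R2L, U2R) by treating each class one-versus-rest. The sketch below uses scikit-learn's convention of rows for true classes and columns for predictions. The labels are hypothetical. The FAR shown here counts normal connections predicted as any attack class; this is one reasonable multi-class reading and an assumption on our part.

```python
import numpy as np
from sklearn.metrics import confusion_matrix

def one_vs_rest_counts(cm: np.ndarray, k: int):
    """TP, FP, TN, FN for class k from a multi-class confusion matrix
    (rows = true class, columns = predicted class)."""
    tp = cm[k, k]
    fp = cm[:, k].sum() - tp
    fn = cm[k, :].sum() - tp
    tn = cm.sum() - tp - fp - fn
    return tp, fp, tn, fn

# Hypothetical true/predicted labels over the five classes 0..4.
y_true = [0, 0, 0, 1, 1, 2, 2, 3, 4, 0]
y_pred = [0, 0, 1, 1, 1, 2, 0, 3, 0, 0]
cm = confusion_matrix(y_true, y_pred, labels=[0, 1, 2, 3, 4])

# Normal-vs-attack view: share of normal traffic flagged as any attack.
normal_total = cm[0, :].sum()
far = (normal_total - cm[0, 0]) / normal_total
print(cm)
print("counts for DoS (class 1):", one_vs_rest_counts(cm, 1))
print("FAR (normal flagged as attack):", far)
```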

5.6. Hyper-Parameter Tuning

In the experimental setup shown in Table 4, hyper-parameter optimization is employed to improve NIDS detection accuracy by fine-tuning key parameters. Grid Search exhaustively evaluates every combination in a predefined parameter grid, while Random Search samples a fixed number of combinations from specified ranges; both are used to identify optimal settings. Cross-validation assesses how well each configuration generalizes across data folds, mitigating overfitting and improving reliability in detecting malicious activity. This tuning aims for effective real-world performance, balancing accurate detection with minimal false alarms.
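Both search strategies are available in scikit-learn as `GridSearchCV` and `RandomizedSearchCV`. The sketch below tunes an SVM with tenfold stratified cross-validation on synthetic data. The parameter grid and sampling ranges are illustrative assumptions and do not reproduce the settings in Table 4.

```python
from scipy.stats import loguniform
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, RandomizedSearchCV, StratifiedKFold
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = make_classification(n_samples=300, n_features=10, random_state=0)
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=0)
pipe = make_pipeline(StandardScaler(), SVC())

# Grid search: every combination (3 x 2 = 6) is scored with 10-fold CV.
grid = GridSearchCV(pipe, {"svc__C": [0.1, 1, 10], "svc__kernel": ["linear", "rbf"]},
                    cv=cv, scoring="accuracy").fit(X, y)

# Random search: n_iter settings sampled from log-uniform distributions.
rand = RandomizedSearchCV(pipe, {"svc__C": loguniform(1e-2, 1e2),
                                 "svc__gamma": loguniform(1e-3, 1e0)},
                          n_iter=10, cv=cv, scoring="accuracy",
                          random_state=0).fit(X, y)

print("grid:", grid.best_params_, round(grid.best_score_, 4))
print("random:", rand.best_params_, round(rand.best_score_, 4))
```

Grid search cost grows multiplicatively with each added parameter. Random search keeps the budget fixed at `n_iter` and can still explore continuous ranges.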

Table 4: Hyper-parameter setup for different classification techniques

5.7. Results Before Hyper-Parameter Optimization

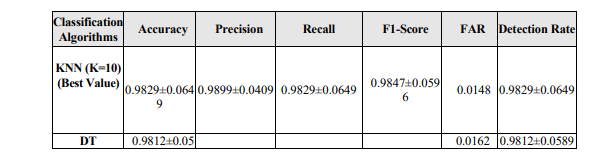

This section presents evaluation results of various machine learning methods for intrusion detection on the 1999 KDD Cup dataset, implemented with Scikit-learn. Ten-fold cross-validation was employed: the dataset was divided into ten equal parts, and in each iteration nine folds were used for training and one for testing. Performance metrics were averaged across folds to assess consistency. All classifiers were first evaluated with Scikit-learn's default hyper-parameters to establish baseline performance prior to hyper-parameter tuning.
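The baseline protocol can be sketched with scikit-learn's `cross_validate`. It reports the mean and standard deviation over ten stratified folds for a few classifiers left at their default hyper-parameters. The data is synthetic, the classifier subset is illustrative, and the macro-averaged F1 is our choice of multi-class averaging.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.naive_bayes import BernoulliNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=500, n_features=15, n_informative=6,
                           n_classes=3, random_state=0)
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=0)

# Default hyper-parameters only: this is the untuned baseline.
classifiers = {"SVM": SVC(), "KNN": KNeighborsClassifier(),
               "DT": DecisionTreeClassifier(random_state=0), "BNB": BernoulliNB()}

results = {}
for name, clf in classifiers.items():
    scores = cross_validate(clf, X, y, cv=cv, scoring=["accuracy", "f1_macro"])
    acc = scores["test_accuracy"]            # one value per fold
    results[name] = (acc.mean(), acc.std())
    print(f"{name}: accuracy {acc.mean():.4f} ± {acc.std():.4f}, "
          f"macro-F1 {scores['test_f1_macro'].mean():.4f}")
```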

Table 5: Performance comparison of ML classifiers on the 1999 KDD Cup intrusion dataset for network IDS before hyper-parameter optimization

The average values and standard deviations of the classification outcomes are shown in Table 5.

Table 5 summarizes the detection performance of various ML classifiers on the 1999 KDD Cup dataset with default parameters, in terms of accuracy and false alarm rate (FAR). SVM achieved the highest accuracy of 98.08% with the lowest FAR of 0.0123, demonstrating superior intrusion detection. KNN, RF, BPN, and DT also performed well, with accuracies above 97% and low FARs, while SGD had the lowest accuracy (90.46%) and a higher FAR (0.0424). MNB recorded 93.29% accuracy with a FAR of 0.0383, and XGBoost, AdaBoost, and Ridge achieved between 94% and 95% accuracy. Fig. 19 illustrates these results, highlighting SVM's superior performance and SGD's relative ineffectiveness in its default configuration.

Fig. 19: Performance comparison of ML classification algorithms before hyper-parameter optimization in NIDS

5.8. Results After Hyper-Parameter Optimization

This section evaluates the performance of multiple ML classifiers on the 1999 KDD Cup intrusion dataset, a widely used benchmark in NIDS research. Classifiers were implemented with Scikit-learn and assessed via ten-fold cross-validation, splitting the dataset into ten folds with nine for training and one for testing per iteration, which gives a lower-variance estimate of generalization performance and helps reveal overfitting. To optimize the feature space and improve classifier efficiency, Recursive Feature Elimination (RFE) was applied to retain the most significant features while discarding less impactful ones. Combining RFE with cross-validation provides insight into classifier generalization and real-world applicability.
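One way to combine RFE with cross-validation without leaking information is to place RFE inside a scikit-learn `Pipeline`. Features are then re-selected on the training folds of each split, and the held-out fold never influences the selection. This is a sketch under assumptions: the paper does not say whether selection was repeated per fold, and the linear-SVM ranker, the 10 retained features, and the RBF classifier settings are illustrative.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = make_classification(n_samples=400, n_features=30, n_informative=8,
                           n_redundant=4, random_state=1)

# Scaling and RFE are refit on the training folds of every split.
pipe = Pipeline([
    ("scale", StandardScaler()),
    ("rfe", RFE(SVC(kernel="linear"), n_features_to_select=10, step=2)),  # ranks by |coef_|
    ("clf", SVC(kernel="rbf", C=10)),
])
scores = cross_val_score(pipe, X, y,
                         cv=StratifiedKFold(n_splits=10, shuffle=True, random_state=1))
print(f"accuracy {scores.mean():.4f} ± {scores.std():.4f}")
```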

The key hyper-parameters of each classifier, including learning rate, maximum tree depth, and regularization factors, were initially set to Scikit-learn’s default values. Parameters such as alpha for Ridge and C for SVM control model complexity and help mitigate overfitting. For tree-based models such as Random Forest and XGBoost, n_estimators and max_depth were adjusted to balance performance against overfitting. Hyper-parameters were then fine-tuned empirically to optimize generalization and improve detection of the multiple attack types in the 1999 KDD Cup dataset. Ten-fold cross-validation provides a reliable evaluation of classifiers such as SVM, XGBoost, AdaBoost, RF, BNB, MNB, LR, KNN, DT, and BPN, and enables assessment of key performance metrics, including Accuracy, Precision, Recall, F1-score, FAR, and Detection Rate (DR), offering a comprehensive view of each classifier’s effectiveness. Comparing these metrics helps identify the most suitable ML approaches for detecting and classifying network intrusions.
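The tuning step can be sketched with Scikit-learn's `GridSearchCV` (exhaustive search) and `RandomizedSearchCV` (sampled search) over the parameters named above: C and gamma for SVM, alpha for Ridge, and n_estimators and max_depth for Random Forest. The data and search ranges below are illustrative assumptions, not the grids used in the study.

```python
# Sketch only: grid and random search over the hyper-parameters named above.
# ASSUMPTIONS: synthetic stand-in data and illustrative search ranges; these are
# not the grids or values used in the study.
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import RidgeClassifier
from sklearn.model_selection import GridSearchCV, RandomizedSearchCV, StratifiedKFold
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = make_classification(n_samples=600, n_features=20, n_informative=8, random_state=2)
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=2)

# Exhaustive grid over SVM regularization strength C and RBF kernel width gamma.
svm_search = GridSearchCV(
    make_pipeline(StandardScaler(), SVC()),
    param_grid={"svc__C": [0.1, 1, 10, 100], "svc__gamma": ["scale", 0.01, 0.1]},
    cv=cv, scoring="accuracy",
)
svm_search.fit(X, y)

# Exhaustive grid over Ridge's L2 penalty alpha.
ridge_search = GridSearchCV(
    RidgeClassifier(), param_grid={"alpha": [0.01, 0.1, 1.0, 10.0]},
    cv=cv, scoring="accuracy",
)
ridge_search.fit(X, y)

# Random search: samples n_iter combinations of ensemble size and tree depth.
rf_search = RandomizedSearchCV(
    RandomForestClassifier(random_state=2),
    param_distributions={"n_estimators": [50, 100], "max_depth": [None, 5, 10, 20]},
    n_iter=4, cv=cv, scoring="accuracy", random_state=2,
)
rf_search.fit(X, y)

for name, search in [("SVM", svm_search), ("Ridge", ridge_search), ("RF", rf_search)]:
    print(f"{name}: best {search.best_params_} -> CV accuracy {search.best_score_:.4f}")
```

Note that `best_score_` is measured on the same folds used to pick the configuration, so it tends to be slightly optimistic. Nested cross-validation (an outer loop around the search) gives a less biased estimate if that matters.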

Table 6 Performance comparisons of ML classifiers on the 1999 KDD Cup intrusion dataset for Network IDS with Hyper-Parameter Optimization

The average values and standard deviations of the classification outcomes are shown in Table 6.

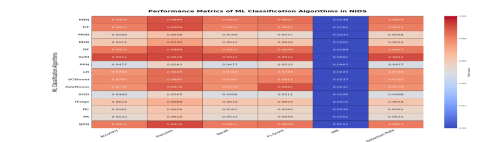

Table 6 highlights SVM as the top-performing classifier, achieving 99.12% Accuracy, 99.19% Precision, 99.12% Recall, 99.12% F1-score, and the lowest FAR of 0.0091. KNN and BPN followed closely, with accuracies of 98.29% and 98.21% and low FARs of 0.0148 and 0.0152, respectively. DT and RF showed similar reliability, with accuracies of 98.12% and 98.27% and FARs of 0.0162 and 0.0169, respectively. The ensemble methods XGBoost (97.87%) and AdaBoost (97.76%) delivered strong performance, though below SVM. The lightweight classifiers BNB (96.12%) and MNB (95.68%) are suited to resource-limited scenarios but had higher FARs (0.0301 and 0.0345). Logistic Regression achieved 97.69% accuracy with a FAR of 0.0220. PA, SGD, and Perceptron underperformed, with detection rates below 95.50%, limiting their suitability for critical intrusion detection tasks. Figs. 20–34 present line graphs illustrating classifier performance across the various metrics after hyper-parameter tuning.
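The per-metric means and standard deviations in Table 6 can be reproduced in form (not in value) with `cross_validate` and a scoring dictionary, wrapping FAR in `make_scorer`. The sketch assumes synthetic imbalanced stand-in data, labels 0 = normal and 1 = attack, and weighted averaging for Precision, Recall, and F1. Weighted recall always equals accuracy, which is consistent with SVM's identical Accuracy and Recall in Table 6, but the averaging actually used is our inference, not something stated here.

```python
# Sketch only: per-fold Accuracy, Precision, Recall, F1-score and FAR, summarized
# as mean ± standard deviation like Tables 5 and 6.
# ASSUMPTIONS: synthetic imbalanced stand-in data, labels 0 = normal / 1 = attack,
# and weighted averaging for precision/recall/F1.
from sklearn.datasets import make_classification
from sklearn.metrics import confusion_matrix, make_scorer
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def false_alarm_rate(y_true, y_pred):
    """FAR = FP / (FP + TN), the share of normal records flagged as attacks."""
    tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
    return fp / (fp + tn)


scoring = {
    "accuracy": "accuracy",
    "precision": "precision_weighted",
    "recall": "recall_weighted",
    "f1": "f1_weighted",
    "far": make_scorer(false_alarm_rate),  # reported only; lower is better
}

X, y = make_classification(n_samples=1500, n_features=30, n_informative=10,
                           weights=[0.8, 0.2], random_state=3)
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=3)
cv_results = cross_validate(make_pipeline(StandardScaler(), KNeighborsClassifier()),
                            X, y, cv=cv, scoring=scoring)

summary = {}
for metric in scoring:
    fold_values = cv_results[f"test_{metric}"]  # one value per fold
    summary[metric] = (fold_values.mean(), fold_values.std())
    print(f"{metric:>9}: {fold_values.mean():.4f} ± {fold_values.std():.4f}")
```

If FAR were used to select hyper-parameters rather than only reported, the scorer would need `greater_is_better=False` so that the search minimizes it.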

Fig. 35 Heat map representation of prediction accuracy for different machine learning algorithms in NIDS

5.9. Analysis of ROC Curves for Machine Learning Classifiers on the 1999 KDD Cup Intrusion Data

Fig. 36 Performance Evaluation Using ROC Curves: ML Classifiers on the 1999 KDD Cup Intrusion Dataset

Fig. 36 shows the ROC analysis on the 1999 KDD Cup dataset, where SVM, BPN, and RF achieved the highest AUC (~0.98). KNN, DT, and BNB followed (0.89–0.94), while XGBoost and AdaBoost performed moderately (0.86–0.88). All models exceeded the random-guess baseline (AUC = 0.5), confirming effective intrusion detection.
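An ROC curve needs a continuous score per record rather than a hard label, because the curve is traced by sweeping a decision threshold over those scores. The sketch below computes one curve and its AUC, alongside the random baseline, assuming synthetic stand-in data, a single stratified hold-out split, and the SVM's `decision_function` output as the score (a probability from `predict_proba` would serve the same role for RF or DT).

```python
# Sketch only: ROC curve and AUC for one classifier, plus a random-score baseline.
# ASSUMPTIONS: synthetic stand-in data, a single hold-out split, and the SVM's
# decision_function as the ranking score.
import numpy as np
from sklearn.datasets import make_classification
from sklearn.metrics import roc_auc_score, roc_curve
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = make_classification(n_samples=1000, n_features=20, n_informative=8, random_state=4)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, stratify=y, random_state=4)

model = make_pipeline(StandardScaler(), SVC()).fit(X_tr, y_tr)
scores = model.decision_function(X_te)  # continuous scores; ROC sweeps a threshold over them

fpr, tpr, thresholds = roc_curve(y_te, scores)  # points of the ROC curve
auc = roc_auc_score(y_te, scores)               # area under that curve

# Scores with no relation to the labels give AUC near 0.5 (the diagonal of an ROC plot).
rng = np.random.default_rng(4)
random_auc = roc_auc_score(y_te, rng.random(len(y_te)))
print(f"SVM AUC {auc:.3f} vs random baseline {random_auc:.3f}")
```

Plotting `fpr` against `tpr` for each classifier on shared axes gives a figure of the kind shown in Fig. 36.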

Fig. 37 Performance Comparisons of ML Classification Algorithms with hyper-parameter optimization in NIDS

Fig. 37 presents a bar chart comparing the classifiers across Accuracy, Precision, Recall, F1-score, and FAR. SVM achieves the highest performance, with 99.12% accuracy, strong Precision, Recall, and F1-score, and a minimal FAR, highlighting its effectiveness in detecting network intrusions on the 1999 KDD Cup dataset. KNN, RF, BPN, DT, and LR also show strong results, while ensemble methods such as XGBoost and AdaBoost perform well but do not surpass SVM. Linear models such as Ridge and SGD exhibit moderate performance, suggesting difficulty in capturing the complexity of the dataset. These results underscore SVM’s superiority for NIDS on this benchmark and provide guidance for selecting and tuning classifiers for robust intrusion detection.

6. CONCLUSION AND FUTURE SCOPE

Detecting network intrusions is critical for maintaining cybersecurity, and machine learning (ML) has proven effective in identifying malicious activity within network traffic. Supervised ML algorithms enable systems to distinguish legitimate from suspicious behavior, enhancing protection against evolving threats. Using the 1999 KDD Cup intrusion dataset, this study applied hyper-parameter tuning to optimize the classifiers. SVM, KNN, RF, and XGBoost achieved high accuracy and reliable detection rates, while Perceptron (PPN) and Stochastic Gradient Descent (SGD) were less effective. Classifiers such as Naïve Bayes, Ridge, and Passive-Aggressive showed moderate performance, highlighting the variability in algorithm effectiveness for NIDS.

As cyber threats evolve, future research will focus on advanced techniques capable of handling large-scale, dynamic datasets. Unsupervised methods, including K-means, One-Class SVM (OC-SVM), Isolation Forest, DBSCAN, and Autoencoders, are promising for detecting novel attacks, such as zero-day threats, without labeled data. Hybrid approaches combining multiple learning paradigms can improve adaptability and detection accuracy. Moreover, integrating Explainable AI (XAI) will enhance transparency and interpretability, fostering trust in real-world deployment. These advancements promise more adaptive, scalable, and robust intrusion detection systems capable of mitigating increasingly sophisticated cyber threats.

REFERENCES

[1] Mambwe Sydney, Kasongo. (2023). A deep learning technique for intrusion detection system using a Recurrent Neural Networks based framework. Computer Communications. 199. 10.1016/j.comcom.2022.12.010.

[2] Khan, M.A. HCRNNIDS: Hybrid Convolutional Recurrent Neural Network-Based Network Intrusion Detection System. Processes 2021, 9, 834. https://doi.org/10.3390/pr9050834.

[3] Vibekananda Dutta, Michal Choraś, Rafal Kozik, and Marek Pawlicki, “Hybrid Model for Improving the Classification Effectiveness of Network,” Springer, p. 10, 2021.

[4] Yu, D., & Deng, L. (2011). Deep Learning and Its Applications to Signal and Information Processing [Exploratory DSP]. IEEE Signal Processing Magazine, 28(1), 145-161. https://doi.org/10.1109/MSP.2010.939038.

[5] Saidane, Samia & Telch, Francesco & Shahin, Kussai & Granelli, Fabrizio. (2024). Optimizing Intrusion Detection System Performance Through Synergistic Hyper-Parameter Tuning and Advanced Data Processing. 10.2139/ssrn.4914947.

[6] Ilemobayo, Justus & Durodola, Olamide & Alade, Oreoluwa & Awotunde, Opeyemi & Adewumi, Temitope & Falana, Olumide & Ogungbire, Adedolapo & Osinuga, Abraham & Ogunbiyi, Dabira & Odezuligbo, E & Edu, Oluwagbotemi & Ifeanyi, Ark. (2024). Hyper-Parameter Tuning in Machine Learning: A Comprehensive Review. Journal of Engineering Research and Reports. 26. 388-395. 10.9734/jerr/2024/v26i61188.

[7] Mambwe Sydney, Kasongo. (2023). A deep learning technique for intrusion detection system using a Recurrent Neural Networks based framework. Computer Communications. 199. 10.1016/j.comcom.2022.12.010.

[8] Khan, M.A. HCRNNIDS: Hybrid Convolutional Recurrent Neural Network-Based Network Intrusion Detection System. Processes 2021, 9, 834. https://doi.org/10.3390/pr9050834.

[9] Vibekananda Dutta, Michal Choraś, Rafal Kozik, and Marek Pawlicki, “Hybrid Model for Improving the Classification Effectiveness of Network,” Springer, p. 10, 2021.

[10] Akhil Krishna, Ashik Lal M.A., Athul Joe Mathewkutty, Dhanya Sarah Jacob, Hari M, “Intrusion Detection and Prevention System Using Deep Learning,” IEEE Xplore, p. 6, 2020.

[11] Mangayarkarasi Ramaiah, Vanmathi Chandrasekaran, Vinayakumar Ravi, Neeraj Kumar, “An intrusion detection system using optimized deep neural network architecture,” Transactions on Emerging Telecommunications Technologies, p. 9, 2020.

[12] Hossain, Md & Ghose, Dipayan & Partho, All & Ahmed, Minhaz & Chowdhury, Md Tanvir & Hasan, Mahamudul & Ali, Md & Jabid, Taskeed & Islam, Maheen. (2023). Performance Evaluation of Intrusion Detection System Using Machine Learning and Deep Learning Algorithms. 1-6. 10.1109/IBDAP58581.2023.10271964.

[13] Jose, Jinsi & Jose, Deepa. (2021). Performance Analysis of Deep Learning Algorithms for Intrusion Detection in IoT. 1-6. 10.1109/ICCISc52257.2021.9484979.

[14] Zarai, R., Kachout, M., Hazber, M. and Mahdi, M. (2020) Recurrent Neural Networks & Deep Neural Networks Based on Intrusion Detection System. Open Access Library Journal, 7, 1-11. doi:10.4236/oalib.1106151.

[15] B. Alsughayyir, A. M. Qamar and R. Khan, “Developing a Network Attack Detection System Using Deep Learning,” 2019 International Conference on Computer and Information Sciences (ICCIS), Sakaka, Saudi Arabia, 2019, pp. 1-5, doi: 10.1109/ICCISci.2019.8716389.

[16] S. A. Althubiti, E. M. Jones and K. Roy, “LSTM for Anomaly-Based Network Intrusion Detection,” 2018 28th International Telecommunication Networks and Applications Conference (ITNAC), Sydney, NSW, Australia, 2018, pp. 1-3, doi: 10.1109/ATNAC.2018.8615300.

[17] Y. Xiao, C. Xing, T. Zhang and Z. Zhao, “An Intrusion Detection Model Based on Feature Reduction and Convolutional Neural Networks,” in IEEE Access, vol. 7, pp. 42210-42219, 2019, doi:10.1109/ACCESS.2019.2904620.

[18] P. Wei, Y. Li, Z. Zhang, T. Hu, Z. Li and D. Liu, “An Optimization Method for Intrusion Detection Classification Model Based on Deep Belief Network,” in IEEE Access, vol. 7, pp. 87593-87605, 2019, doi: 10.1109/ACCESS.2019.2925828.

[19] Liu, Pengju. (2019). An Intrusion Detection System Based on Convolutional Neural Network. ICCAE 2019: Proceedings of the 2019 11th International Conference on Computer and Automation Engineering. 62-67. 10.1145/3313991.3314009.

[20] Sun, Pengfei & Liu, Pengju & Li, Qi & Liu, Chenxi & Lu, Xiangling & Hao, Ruochen & Chen, Jinpeng. (2020). DL-IDS: Extracting features using CNN-LSTM hybrid network for intrusion detection system. Security and Communication Networks. 2020. 1-11. 10.1155/2020/8890306.

[21] D. Liang and P. Pan, “Research on Intrusion Detection Based on Improved DBN-ELM,” 2019 International Conference on Communications, Information System and Computer Engineering (CISCE), Haikou, China, 2019, pp. 495-499, doi: 10.1109/CISCE.2019.00115.

[22] Ugendhar, A. & Illuri, Babu & Vulapula, Sridhar Reddy & Radha, Marepalli & K, Sukanya & Alenezi, Fayadh & Althubiti, Sara & Polat, Kemal. (2022). A Novel Intelligent-Based Intrusion Detection System Approach Using Deep Multilayer Classification. Mathematical Problems in Engineering. 2022. 1-10. 10.1155/2022/8030510.

[23] N. Shone, T. N. Ngoc, V. D. Phai and Q. Shi, “A Deep Learning Approach to Network Intrusion Detection,” in IEEE Transactions on Emerging Topics in Computational Intelligence, vol. 2, no. 1, pp. 41-50, Feb. 2018, doi: 10.1109/TETCI.2017.2772792.

[24] Jordan, Michael & Mitchell, T.M. (2015). Machine Learning: Trends, Perspectives, and Prospects. Science (New York, N.Y.). 349. 255-60. 10.1126/science.aaa8415.

[25] Mahesh, B. (2020) Machine Learning Algorithms—A Review. International Journal of Science and Research, 9, 381-386.

[26] Qifang Bi, Katherine E Goodman, Joshua Kaminsky, Justin Lessler, What Is Machine Learning? A Primer for the Epidemiologist, American Journal of Epidemiology, Volume 188, Issue 12, December 2019, Pages 2222–2239, https://doi.org/10.1093/aje/kwz189.

[27] Jordan, Michael I., and Tom M. Mitchell. “Machine learning: Trends, perspectives, and prospects.” Science 349.6245 (2015): 255-260.

[28] A Machine Learning Approach to Network Intrusion Detection System Using K Nearest Neighbor and Random Forest https://yourpastquestions.com/product/a-machine learning-approach-tonetwork-intrusion detection system.

[29] Salo, F., Nassif, A. B., & Essex, A. (2019). “Dimensionality reduction with IG-PCA and ensemble classifier for network intrusion detection.” Computer Networks, 148, 164-175.

[30] Murphy, K. P. (2012). Machine Learning: A Probabilistic Perspective. MIT Press. (Discusses probabilistic models, including Naive Bayes, with a detailed look at conditional independence and Bayesian methods).

[31] Tsai, C.-F., Hsu, Y.-F., Lin, C.-Y., & Lin, W.-Y. (2009). “Intrusion detection by machine learning: A review.” Expert Systems with Applications, 36(10), 11994-12000.

[32] Ahmadi, A., & Khosravi, A. (2019). “A review on KNN classification algorithm.” International Journal of Computer Science and Network Security, 19(5), 33-42.

[33] Chandola, V., Banerjee, A., & Kumar, V. (2009). “Anomaly detection: A survey.” ACM Computing Surveys (CSUR), 41(3), 1-58.

[34] Zhao, S., Zhang, B., Yang, J. et al. Linear discriminant analysis. Nat Rev Methods Primers 4, 70 (2024). https://doi.org/10.1038/s43586-024-00346.

[35] Chen, T., & Guestrin, C. (2016). XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (pp. 785–794).

[36] Zhao, Y., & Liu, Y. (2023). CopulaGAN boosted Random Forest based Network Intrusion Detection System for hospital network infrastructure. Proceedings of the IEEE International Conference on Communications (ICC), 1-6.

[37] Wang, Y., Liu, Z., Zheng, W., Wang, J., Shi, H., & Gu, M. (2023). A Combined MultiClassification Network Intrusion Detection System Based on Feature Selection and Neural Network Improvement. Applied Sciences, 13(14), 8307.

[38] J. He, L. Ding, L. Jiang, and L. Ma, “Kernel ridge regression classification”, Proceedings of the International Joint Conference on Neural Networks, 2014, pp. 2263-2267.

[39] K. Crammer, O. Dekel, J. Keshet, S. Shalev-Shwartz and Y. Singer, “Online passive-aggressive algorithms”, Journal of Machine Learning Research. 2006, 7, 551–585.

[40] Hindy, H., Atkinson, R., Tachtatzis, C., Colin, J. N., Bayne, E., & Bellekens, X. (2020). Utilizing deep learning techniques for effective zero-day attack detection. Electronics, 9(10), 1684.

AUTHORS

SUDHANSHU SEKHAR TRIPATHY (Member, IEEE) received his Master of Computer Applications (MCA) degree in 2017 from Gandhi Institute for Technology (GIFT), affiliated with Biju Patnaik University of Technology (BPUT), Odisha, India. He is currently pursuing a Ph.D. in Computer Science and Engineering at C.V. Raman Global University, Bhubaneswar, Odisha. He has three years of industry experience and five years of academic teaching experience. His research has been published in two Scopus-indexed journals, one Web of Science-indexed journal, and two UGC CARE Group 1 journals, focusing on cybersecurity with an emphasis on Network Intrusion Detection Systems. His research interests include machine learning, deep learning, network security, zero-day attack detection, and intrusion detection systems.

BICHITRANANDA BEHERA (Member, IEEE) received his Ph.D. in 2022 from Pondicherry University, Puducherry, India. He is currently working as an assistant professor at C.V. Raman Global University, Bhubaneswar, Odisha, India. He has published numerous research articles in reputed journals and international conferences. His current research interests include machine learning, data mining, natural language processing (NLP), cybersecurity, and soft computing. He is actively engaged in the academic community and serves as a reviewer for various reputable journals and publications.