IJNSA 04

SCALABILITY ANALYSIS OF IOT-DAG DISTRIBUTED LEDGERS USING PREFERENTIAL ATTACHMENT TOPOLOGY: A SIMULATION APPROACH

Peter Kimemiah Mwangi 1, Stephen T. Njenga 2 and Gabriel Ndung’u Kamau 2

1 Department of Information Technology, School of Computing Information Technology,

Murang’a University of Technology, Murang’a, Kenya

2 Department of Computer Science, School of Computing Information Technology,

Murang’a University of Technology, Murang’a, Kenya

ABSTRACT

Directed Acyclic Graph (DAG) based Distributed Ledger Technologies (DLTs) are being explored to address the scalability and energy efficiency challenges of traditional blockchain in IoT applications. The objective of this research was to gain insight into algorithms predicting how IoT-DAG DLT horizontal scalability changes with increasing node count in a heterogeneous ecosystem of full and light nodes. It specifically asked how incorporating preferential attachment topology impacts IoT network scalability and performance, focusing on transaction throughput and energy efficiency. Using an Agent-Based Modelling (ABM) simulation, the study evaluated a heterogeneous 1:10 full/light node network with Barabási-Albert Preferential Attachment (PA-2.3) across increasing node counts (100-6400). Performance was measured by Confirmed Transactions Per Second (CTPS) and Mean Transaction Latency (MTL). Results showed that CTPS scales linearly with node count (R² ≈ 1.000), exhibiting robust predictability. MTL increased logarithmically (R² ≈ 0.970), becoming more predictable as the network grew. Horizontal scalability showed exponential decay. The study confirms that IoT-DAG DLTs with preferential attachment can achieve predictable, near-linear horizontal scalability in throughput, highlighting that topology matters and that optimising CTPS yields the highest throughput gains.

KEYWORDS

Directed Acyclic Graph Distributed Ledger, Horizontal Scalability, Internet of Things, NetLogo, Model Simulation, Preferential Attachment Topology

1. INTRODUCTION

Directed Acyclic Graph (DAG) based Distributed Ledger Technologies (DLT) have been gaining attention for their potential to address the scalability and energy efficiency challenges faced by traditional blockchain-based systems in Internet of Things (IoT) applications [1]. Unlike traditional blockchains, e.g. Bitcoin [2], [3], which organise transactions into blocks, a DAG structures transactions as vertices and edges, allowing for parallel processing and thus higher throughput. This makes DAG-based systems like IOTA Tangle [4] particularly suitable for IoT environments where numerous devices need to communicate frequently, securely and efficiently.

The use of agent-based computational models is an emerging tool for empirical research to study behaviour among “bottom-up” models [2]. In agent-based modelling (ABM), each agent follows a set of rules and behaviours, and these agents collectively form a dynamic system that can help researchers gain insights into the emergent properties of complex systems. ABM tools are discrete event simulators that have grown in popularity for simulating theoretical models such as virus propagation in [5], which is similar to message propagation in distributed systems. ABMs have also been used to simulate IoT devices, as shown in recent studies by [3] and [4]. Large networked systems, such as the Internet of Things integrated with Distributed Ledger Technology (IoT-DLT), are challenging to study through empirical experiments because of the number of physical devices and the associated costs. ABM tools can be used to simulate an IoT-DLT environment and perform experiments on emergent behaviour while providing significant savings in material costs and time. The common goal of most generalised ABM simulators is to provide a layer of abstraction that lets modellers focus on the development of agent-based models rather than on their implementation.

The key contribution of this research is insight into the algorithms that can predict how IoT-DAG DLT scalability changes with the increasing node count of a heterogeneous ecosystem of full and light nodes. The key research question is:

How does the incorporation of preferential attachment topology in a DAG-based DLT impact the scalability and performance of IoT networks, particularly in terms of transaction throughput and mean transaction latency for both full and light nodes?

The rest of this paper is organised as follows: Section 2, related studies, Section 3, the methodology used, Section 4, the results of the performance test, Section 5, a discussion analysing the results and Section 6, the conclusion of the research. The IoT-DAG-DLTSim Model is part of an ongoing study on improving the scalability of the IoT-DLT ecosystem.

2. RELATED STUDIES

2.1. Horizontal Scalability of Distributed Systems

Network topology studies in distributed systems have paid particular attention to preferential attachment. The Barabási-Albert Preferential Attachment (PA) [6] topology has been widely studied for its ability to model real-world networks with scale-free properties. The PA topology is characterised by a power-law degree distribution, which can be useful in studying the effects of node increases in distributed systems. Research has shown that preferential attachment can lead to more robust and scalable networks by creating a few highly connected nodes (hubs) that can efficiently distribute information. This topology can be particularly relevant in the context of IoT distributed ledgers, where scalability is a critical concern. Previous simulation studies have focused on evaluating the performance of different consensus algorithms and network topologies under varying conditions. For example, simulations have been used to assess the impact of node increases on network latency, throughput, and energy consumption. These studies provide valuable insights into the scalability challenges and potential solutions for distributed ledger technologies.

Research by [1] explored lightweight and scalable blockchain solutions optimised for IoT requirements. For example, the Lightweight Scalable Blockchain (LSB) aims to enhance scalability for IoT by reducing computational overhead. Similarly, the concept of using management hub nodes or high-resource devices to handle communications has been proposed to overcome the limitations of traditional blockchain in IoT settings. These approaches focus on minimising energy consumption and improving transaction-processing speed, which are critical for resource-constrained IoT devices.

In the review by [7] on the technical and security issues of integrating IoT and distributed ledgers, the authors identified the problem of scalability, which is characterised by the need to support an increasing load of transactions in addition to an increasing number of nodes within the network.

2.2. Scalability Performance Measurement

According to [8], the transaction processing time equation of a distributed system can be summarised as in Eq. (1)

where 𝑡𝑖 is the issuance time, 𝑡𝑐 is the confirmation time, 𝑡𝑣 is the validation time, 𝑡𝑝𝑜𝑤 is the PoW time and 𝑡𝑛𝑥 is the network overhead, e.g. encryption/decryption, hashing and authentication. In [9], a study comparing various DLT designs, the authors identify three performance characteristics and their operationalisation, shown in Table 1.

Table 1. Example DLT Performance characteristics and measurement ([9])

Adapting the formula by [9], Eq. (1) can be used to derive a formula for the change in scalability due to a change in the number of nodes, i.e. from 𝑘𝑖 to 𝑘𝑖 + ∆𝑖

where the function 𝜑(𝑘𝑖), 𝑖 ∈ [1..𝑁], is the ratio at a given time at one of the nodes 𝑖 ∈ 1..𝑁. If the value of 𝛹 is one (1), scalability remains constant. If 𝛹 falls below one (1), scalability has reduced; if it exceeds one (1), scalability has increased. While most studies focus on improving consensus protocols and energy efficiency, few examine the role light nodes play in IoT-DAG networks at scale. This research studied the effect of node increase, with a heterogeneous mix of light and full nodes, using a simulation. This allows predictive metrics to be derived for the behaviour of the IoT-DAG-based DLT ecosystem. Although the study uses a laboratory environment, it can nevertheless provide insight into how networks behave as the number of nodes increases.
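The interpretation of 𝛹 can be illustrated with a short Python sketch. It is only one plausible reading, under two loudly stated assumptions: that 𝜑 is the CTPS/MTL ratio (the quantity this paper later uses when measuring scalability) and that 𝛹 is the quotient of 𝜑 after and before the node increase. The function names `phi`, `psi` and `interpret` are hypothetical, and the inputs are the endpoint means quoted in Section 4.2.

```python
def phi(ctps: float, mtl: float) -> float:
    """Performance ratio at one network size (ASSUMED here to be CTPS / MTL)."""
    if mtl <= 0:
        raise ValueError("MTL must be positive")
    return ctps / mtl


def psi(phi_before: float, phi_after: float) -> float:
    """Scalability ratio between two network sizes (ASSUMED form: after / before)."""
    if phi_before <= 0:
        raise ValueError("phi_before must be positive")
    return phi_after / phi_before


def interpret(value: float, tol: float = 1e-9) -> str:
    """Apply the rule from the text: 1 is constant, below 1 reduced, above 1 increased."""
    if abs(value - 1.0) <= tol:
        return "constant"
    return "increased" if value > 1.0 else "reduced"


# Endpoint means quoted in Section 4.2 (100 and 6400 nodes).
phi_100 = phi(ctps=2.456, mtl=12.222)
phi_6400 = phi(ctps=63.548, mtl=22.638)
ratio = psi(phi_100, phi_6400)
print(f"phi(100)={phi_100:.3f}  phi(6400)={phi_6400:.3f}  psi={ratio:.2f}  ({interpret(ratio)})")
```

Under these assumptions the ratio compares only two network sizes; applying `psi` to each consecutive pair of sizes would give the step-by-step view.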

3. METHODS AND MATERIALS

3.1. Model Experimental Design and Setup

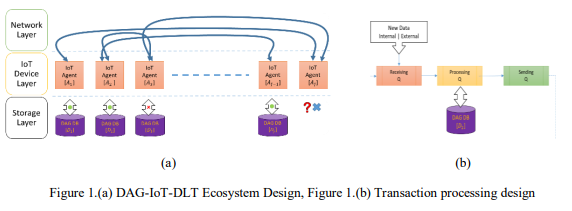

In the experiment, the performance of the IoT-DLT model was measured in terms of CTPS and MTL, and this information was used to calculate the simulation model’s scalability using Eqs. (1) and (2). Figure 1 shows a high-level design of the IoT-DAG-DLT ecosystem. Figure 1(a) shows a complete ecosystem with a set of agents 𝐴 ∈ [𝐴1, 𝐴2 … 𝐴𝑗], some with active ledgers while others are available or unavailable at specific times. Each agent can connect to other agents via the network area. Figure 1(b) shows the design of individual agents, which use a gossip-like protocol to receive messages, process them and send them to other agents.

Figure 1. (a) DAG-IoT-DLT ecosystem design; (b) transaction processing design

During each epoch, each agent randomly produced transactions with a probability of 0.01, while each full agent with a data store processed the transactions it received or generated by updating its local database. A Gossip SIR [10] protocol was used to propagate the transactions to the neighbouring agents. New transactions were appended to the DAG database as new tips by attaching them to two existing tips in the local database, after which they were gossiped to the neighbouring agents for replication using the SIR [10] algorithm. When transactions were received for replication, the DAG ledger attached them to the corresponding DAG tips if those tips had already been received; otherwise, the DAG ledger waited for the required tips. If it took too long to obtain the required DAG transactions, the agent requested them from the neighbouring agents using the Gossip SIR algorithm.

The key components of the agent model are:

Agents: Full agents with a local DAG database, and light agents with no DAG database.

Agent network: Agents are interconnected in a scale-free random network generated with the Barabási-Albert Preferential Attachment (PA) [11] algorithm, which simulates internet-like connections with a k-degree of 2.3, as per empirical data from Barabási and Albert [6] on the statistical mechanics of complex networks.

DAG Ledger: Each agent maintains a local database to store new transactions and replicate transactions of neighbouring agents. The DAG Ledger is built using an IOTA-like [12] random algorithm.

Gossip Protocol: Agents send and receive messages using the random Gossip SIR model [10], which performs a push for new messages and a pull for any missing DAG tips based on the age of the updates.
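The ledger behaviour described above (attach to existing tips, wait when the required tips are missing, discard duplicates) can be sketched in Python as follows. This is a minimal illustration and not the NetLogo implementation used in the study: the class `DagLedger`, its method names and the status strings are hypothetical, tip selection is plain uniform sampling, and the gossip transport is reduced to direct method calls.

```python
import random


class DagLedger:
    """Toy local DAG ledger: every new transaction approves up to two current tips."""

    def __init__(self, rng: random.Random):
        self.rng = rng
        self.parents = {"genesis": ()}  # tx id -> ids of the transactions it approves
        self.tips = {"genesis"}         # transactions nobody has approved yet

    def issue(self, tx_id: str) -> tuple:
        """Create a local transaction attached to (at most) two random tips."""
        candidates = sorted(self.tips)
        chosen = tuple(self.rng.sample(candidates, k=min(2, len(candidates))))
        self.attach(tx_id, chosen)
        return chosen

    def attach(self, tx_id: str, parents: tuple) -> str:
        """Attach a local or replicated transaction and report what happened."""
        if tx_id in self.parents:
            return "duplicate"          # already stored, so discard it
        if any(p not in self.parents for p in parents):
            return "waiting"            # required tips missing, caller should pull them
        self.parents[tx_id] = tuple(parents)
        self.tips.difference_update(parents)
        self.tips.add(tx_id)
        return "attached"


rng = random.Random(42)
sender, receiver = DagLedger(rng), DagLedger(rng)
tx1_parents = sender.issue("tx1")
tx2_parents = sender.issue("tx2")

# Replicate out of order: tx2 arrives before the tip it approves.
statuses = [
    receiver.attach("tx2", tx2_parents),  # required tip not yet received
    receiver.attach("tx1", tx1_parents),  # now the missing tip arrives
    receiver.attach("tx2", tx2_parents),  # retry succeeds
    receiver.attach("tx2", tx2_parents),  # a second copy is discarded
]
print(statuses)
```

The `"waiting"` status stands in for the point at which an agent would issue a pull request to its neighbours; a fuller sketch would add a timeout and a neighbour list.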

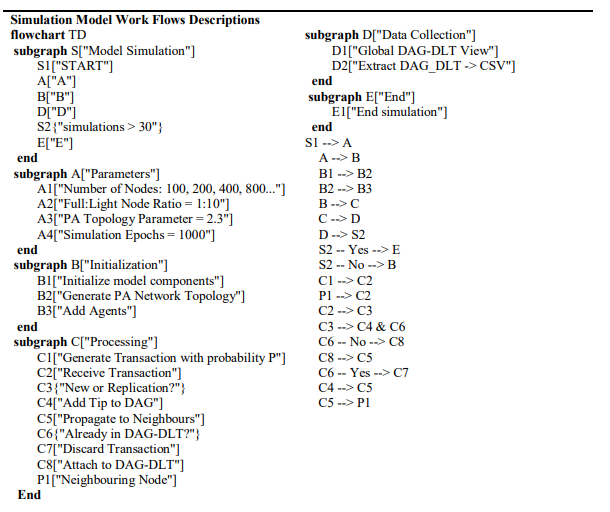

Figure 2 shows the design of the IoT-DAG-DLTSim model, which simulates a decentralised ledger system using a directed acyclic graph (DAG) architecture within a scale-free network topology. Initialisation begins by defining critical parameters: network size (ranging from 100 to 6400 nodes), a 1:10 ratio of full to light nodes, and a power-law topology (γ = 2.3) to emulate real-world peer-to-peer networks. Each simulation epoch executes a transaction lifecycle in which nodes probabilistically generate transactions, which are validated and appended to the DAG as new tips. Transactions may also be received from neighbouring nodes; the receiving agent checks whether they are already attached and either appends them to the DAG ledger or discards them if they are duplicates. Valid transactions are sent to neighbouring nodes through a gossip-based propagation protocol. The model captures global state snapshots at each epoch and, at the end of the experiment, extracts performance metrics including Mean Transaction Latency (MTL) and Confirmed Transactions Per Second (CTPS). To ensure statistical reliability, the simulation iterates 30 times per parameter set, with outputs exported to structured datasets for analysis. This design enables the study of emergent behaviours in heterogeneous networks while quantifying trade-offs between latency, throughput, and network growth dynamics under varying conditions.

Figure 2. Model design flow diagram, showing simulation flow with setup and simulation process per node

The descriptive flow diagram in Mermaid [13] code format is shown in Figure 3. There are two key parts: the setup part, which selects the parameters and the number of iterations for the simulation, and the run part, which executes the simulation and collects the data.

Figure 3. Mermaid pseudo code

Each full node stores its own transactions. To minimise memory and improve performance, a binary structure was used to store each transaction. An existing binary string extension was modified to accommodate this new requirement. A data structure was designed to store the global view of all the full nodes, to allow capture of confirmed transactions per second and the mean transaction latency.

3.2. PA Model Configuration

Using a PA of 2.3, seven (7) network topology configurations were created for 10, 20, 40, 80, 160, 320 and 640 full nodes. Then 90, 180, 360, 720, 1440, 2880 and 5760 light nodes, respectively, were each given a single connection to a randomly chosen full node. A static configuration of the PA model was used to allow for reproducibility of results.
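A topology of this shape can be sketched in pure Python as shown below. The sketch is illustrative only: it uses the textbook Barabási-Albert growth rule with `m = 2` links per new full node, which is an assumption and does not reproduce the paper's PA value of 2.3, and the names `barabasi_albert` and `build_topology` are hypothetical. A fixed seed stands in for the static configuration used for reproducibility.

```python
import random


def barabasi_albert(n: int, m: int, rng: random.Random) -> set:
    """Return the undirected edges of a Barabási-Albert graph on nodes 0..n-1."""
    if not 1 <= m < n:
        raise ValueError("need 1 <= m < n")
    edges = set()
    targets = list(range(m))  # the first new node links to the m seed nodes
    repeated = []             # each node appears once per incident edge
    for new in range(m, n):
        for t in targets:
            edges.add((t, new))
        repeated.extend(targets)
        repeated.extend([new] * m)
        # Uniform sampling from `repeated` is degree-proportional sampling.
        chosen = set()
        while len(chosen) < m:
            chosen.add(rng.choice(repeated))
        targets = sorted(chosen)
    return edges


def build_topology(n_full: int, n_light: int, m: int = 2, seed: int = 1):
    """Full nodes form a PA graph; each light node gets one link to a random full node."""
    rng = random.Random(seed)  # fixed seed gives a static, repeatable topology
    full_edges = barabasi_albert(n_full, m, rng)
    # Light nodes are numbered after the full nodes: n_full .. n_full + n_light - 1.
    light_links = {n_full + i: rng.randrange(n_full) for i in range(n_light)}
    return full_edges, light_links


full_edges, light_links = build_topology(n_full=10, n_light=90)
print(len(full_edges), "full-node links;", len(light_links), "light-node links")
```

Calling `build_topology(640, 5760)` would give the largest of the seven configurations under the same assumptions.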

Figure 4. PA topologies (k-degree = 2.3) showing full and light agent connections for seven (7) topologies: (a) = 100, (b) = 200, (c) = 400, (d) = 800, (e) = 1600, (f) = 3200, (g) = 6400 agents respectively

3.3. Model Parameters

Table 2 shows the parameters available and used to set up and run the experiment. The input parameters that determine the size of the network are changed during successive experiments using the NetLogo “BehaviorSpace” tool. The remaining inputs stay the same.

Table 2. Model input and output parameters

3.4. Equipment Configuration

The model was implemented using the popular NetLogo 6.3 ABM tool, which runs on the Java Virtual Machine (JVM) and therefore operates on both Windows and Linux-based systems. Table 3 lists the equipment configuration.

Table 3. Experimental computing tools, setup and configuration

3.5. Model Execution

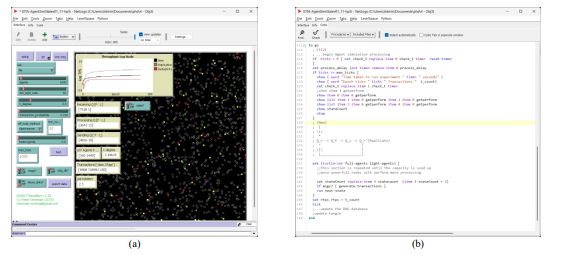

The simulation interface on NetLogo 6.3 is shown in Figure 5(a), along with the main execution code in Figure 5(b). NetLogo’s BehaviorSpace tool allows multiple experimental runs with various parameters to be executed unattended.

Figure 5. Simulation screen in NetLogo 6.3 configured for 1,600 nodes, with a full:light node ratio of 1:10 and a PA of 2.3.

Each model was run 30 times for network sizes of 𝑁𝑡𝑜𝑡𝑎𝑙 = 100, 200, 400, 800, 1600, 3200 and 6400 IoT agents. In this experiment, all other parameters were kept constant, i.e. 𝑁𝑓𝑢𝑙𝑙 : 𝑁𝑙𝑖𝑔ℎ𝑡 = 1:10, 𝜅 = 2.3, 𝜆 = 0.01, 𝜄 = [1, 1000], 𝐻𝑜𝑚𝑜𝑔𝑒𝑛𝑒𝑖𝑡𝑦 = 1. A total of 30 runs × 7 agent configurations = 210 iterations were performed in NetLogo on a Toshiba i3 machine with 12 GB of RAM running 64-bit Windows 10.

3.6. Model Dataset

The data was extracted from the Global DAG Ledger, and 840 records were collected for the experiment.

The data collected included the CTPS and MTL, calculated along with their respective standard deviation, standard error, kurtosis, skewness and number of transactions over 1000 epochs. The data was exported from the pseudo-DAG database, stored in a NetLogo [14] table structure, into CSV files, which were then loaded into Julia 1.10 DataFrames.jl [15] for analysis using the CurveFit.jl, HypothesisTests.jl, GLM.jl, StatsBase.jl and StatsModels.jl libraries, with GLMakie.jl and StatsPlots.jl used to produce the graphical outputs.

Figure 6. Onscreen simulation model output for 1600 nodes and 1:10 full:light node ratio, with a PA of 2.3 and a transaction arrival rate probability of 0.01 over all nodes

4. RESULTS

The following section presents the results of the simulation analysis, starting with runtime details, descriptive summaries, and inferential insights. The results were also visualised using line graphs.

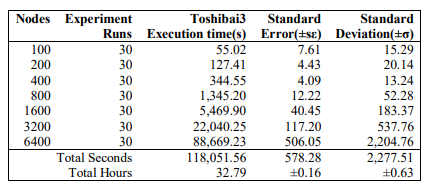

4.1. Simulation Runtime

At the onset, a comprehensive simulation was conducted across varying node counts to evaluate the impact on key performance metrics, including CTPS, MTL, and horizontal scalability. The experiment took a total of 32.79 hours, with a standard error (SE) of 0.16 and a standard deviation (SD) of 0.63, to run the complete sets of simulations, as shown in Table 4.

Table 4. Full experiment runtimes in seconds (s), 30 runs per node count

Each simulation was run 30 times to obtain a mean, because of the stochastic behaviour of the IoT devices. Both SE and SD increase with larger node counts (e.g., from 100 to 6400 nodes), which is expected because runtime grows much faster than the node count (1.591 s → 2946.44 s for a 64-fold increase in nodes), so variability scales with it. This adequately reflects horizontal scaling. The relative error (SE/μ) remains small (e.g., for 6400 nodes: SE/μ = 14.138/2946.44 ≈ 0.48%), suggesting consistent precision despite larger runtimes.
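The relative error quoted for 6400 nodes can be checked with a few lines of Python. The helper names `summarise` and `relative_se_pct` are hypothetical, and the short runtime list passed to `summarise` is made-up illustration data, not values from Table 4; only the 14.138 and 2946.44 figures come from the text.

```python
import math
import statistics


def relative_se_pct(se: float, mean: float) -> float:
    """Relative standard error as a percentage of the mean."""
    return 100.0 * se / mean


def summarise(samples):
    """Mean, sample SD, SE and relative SE (%) for a list of run measurements."""
    n = len(samples)
    mean = statistics.fmean(samples)
    sd = statistics.stdev(samples)  # sample standard deviation (n - 1)
    se = sd / math.sqrt(n)          # standard error of the mean
    return {"n": n, "mean": mean, "sd": sd, "se": se, "rse_pct": relative_se_pct(se, mean)}


# Figures quoted in the text for 6400 nodes.
print(round(relative_se_pct(14.138, 2946.44), 2))  # 0.48

# Hypothetical runtimes (seconds), only to show the helper in use.
print(summarise([1.52, 1.61, 1.58, 1.65]))
```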

4.2. Descriptive Analysis of CTPS and MTL

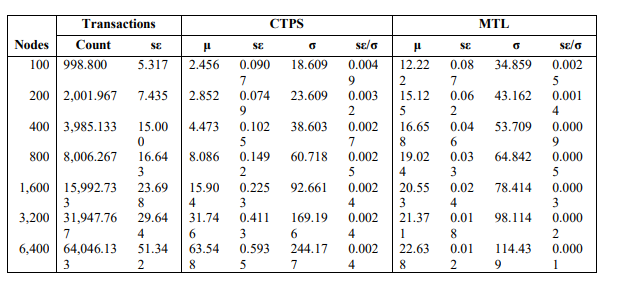

This section provides a descriptive analysis of the CTPS and MTL behaviour of the IoT-DAG model. Table 5 summarises the descriptive statistics for CTPS and MTL collected over 30 experiment runs, for a PA of 2.3 for full nodes and a client-server connection for light nodes, with a full-to-light node ratio of 1:10 and a transaction probability of 0.01 for each node. The symbol μ is the mean, σ is the standard deviation, and σε is the standard error calculated for each experiment set. The transaction count represents the mean number of transactions processed by each full node.

The data in Table 5 demonstrates strong linear scalability with increasing network size (nodes), as evidenced by:

Near-perfect doubling of the transaction count (Count) with each doubling of nodes from 100 to 6,400 (998.8 → 64,046.1), validating the efficiency of the BA-2.3 topology.

CTPS scaled linearly (μ: 2.456 → 63.548), with tight confidence intervals (low *σε/σ* ratios < 0.005), indicating robust predictability.

The stability of *σε/σ* across node sizes (e.g., 0.0024–0.0049 for CTPS) suggests the model’s performance variability is independent of network scale, a critical feature for IoT deployments.

On latency (MTL) behaviour, MTL increased logarithmically with node count (μ: 12.222 → 22.638), reflecting the expected trade-off between network size and propagation delay.

Declining *σε/σ* ratios (0.0025 → 0.0001) show that latency becomes more predictable as the network grows, likely due to the PA model’s hub-dominated routing.
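The transaction counts quoted above (998.8 at 100 nodes, 64,046.1 at 6,400 nodes) sit close to what the generation rule alone would predict. As a rough check, assuming every node issues a transaction with probability 0.01 per epoch over 1000 epochs, the expected count is nodes × 0.01 × 1000. The Python sketch below computes that expectation and runs one small Monte Carlo draw; the function names are hypothetical and the sketch ignores confirmation and propagation entirely.

```python
import random


def expected_transactions(nodes: int, p: float, epochs: int) -> float:
    """Expected number of issued transactions: one Bernoulli(p) trial per node per epoch."""
    return nodes * p * epochs


def simulate_transactions(nodes: int, p: float, epochs: int, rng: random.Random) -> int:
    """Count the transactions issued in one simulated run."""
    count = 0
    for _ in range(epochs):
        for _ in range(nodes):
            if rng.random() < p:
                count += 1
    return count


for n in (100, 6400):
    print(n, "nodes -> expected", expected_transactions(n, 0.01, 1000))

simulated = simulate_transactions(100, 0.01, 1000, random.Random(7))
print("one simulated run at 100 nodes:", simulated)
```

Because the expectation is proportional to the node count, doubling the nodes doubles the expected count, which is the pattern reported in the first item of the list.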

Table 5. IoT-DAG DLT Simulation Model: Descriptive statistics for CTPS and MTL for 30 runs per node count, PA = 2.3, transaction probability 0.01/node, full:light ratio of 1:10

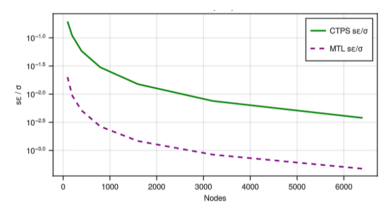

Figure 7 shows the relative errors of the CTPS and MTL data over the node count. The practical implication is that, although larger networks introduce higher latency, the diminishing variability (*σε/σ*) suggests stable performance bounds for IoT applications.

Figure 7. Relative errors of the CTPS and MTL data over a varying number of IoT nodes

The models derived show robustness as indicated by:

Low standard errors (σε) for CTPS and MTL (e.g., CTPS σε: 0.0907 → 0.5935) confirm the simulation’s reliability across 30 runs.

Consistent *σε/σ* ratios (e.g., ~0.0024 for CTPS at *n* ≥ 800) imply the model’s stochastic elements (e.g., transaction probability 0.01/node) do not disproportionately affect outcomes at scale.

Linear CTPS scaling in Figure 8 assumes perfect resource allocation; future studies should incorporate network and compute limits.

4.3. Model Fitting

Table 6 presents the relative standard error (RSE) percentages, model estimates, and model fit (R²) for different parameters in the analysis. Figure 8 plots the values in Table 6 and shows the range of the SE percentage, the model estimate, the type of model and the R² fit for each variable.

Table 6. Relative SE, model estimation and model fit (R²)

For the transactions, the RSE range is very low (0.080% to 0.532%), indicating high precision in the measurements. On CTPS (Confirmed Transactions Per Second), the RSE range (0.243% to 0.487%) remains low, reinforcing model reliability.

Figure 8. Visual representation of the model and curve fit for mean CTPS and MTL and their corresponding R² values, for a transaction arrival probability of 0.01 per node and a full:light ratio of 1:10, with 30 runs per node count.
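The kind of fit summarised in Table 6 and Figure 8 (a straight line in nodes for CTPS, a straight line in ln(nodes) for MTL, each scored with R²) can be sketched with ordinary least squares in plain Python. The study itself used Julia libraries for this step; the code below is an independent illustration, the function names are hypothetical, and the demo series are synthetic values generated from made-up coefficients rather than the paper's measurements.

```python
import math


def least_squares(xs, ys):
    """Fit y = a + b*x by ordinary least squares and return (a, b, r_squared)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equally long series with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or ss_tot == 0:
        raise ValueError("series must not be constant")
    b = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    a = mean_y - b * mean_x
    ss_res = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    return a, b, 1.0 - ss_res / ss_tot


def log_fit(xs, ys):
    """Fit y = a + b*ln(x) by regressing y on ln(x)."""
    return least_squares([math.log(x) for x in xs], ys)


nodes = [100, 200, 400, 800, 1600, 3200, 6400]

# Synthetic demo data with made-up coefficients (NOT the paper's results).
ctps_demo = [0.5 + 0.01 * n for n in nodes]
mtl_demo = [4.0 + 2.0 * math.log(n) for n in nodes]

print("linear fit (a, b, R2):", least_squares(nodes, ctps_demo))
print("log fit    (a, b, R2):", log_fit(nodes, mtl_demo))
```

Fitting the logarithmic model as a linear regression on ln(nodes) keeps both fits within the same least-squares routine, so their R² values are computed the same way.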

The linear model (CTPS ≈ 0.699 + 10⁻² × nodes) fits the data almost perfectly (R² ≈ 1.000), implying a strong direct relationship between CTPS and node count. For MTL, the RSE is extremely low (0.010% to 0.250%), indicating very precise estimates. In Figure 8, the logarithmic model (MTL ≈ 15.882 + 10⁻³ × ln(nodes)) shows a good fit of R² ≈ 0.970, confirming that MTL increases logarithmically with node count, consistent with diminishing returns at scale.

4.4. Scalability Estimation

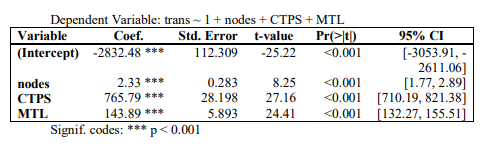

As indicated in Table 7, all predictors (network size in nodes, CTPS, and MTL) had statistically significant effects on transaction throughput (*p* < 0.001). The model explained [R²]% of the variance in throughput, indicating strong predictive power. Each additional node increased throughput by ~2.33 transactions, supporting the scalability of preferential attachment networks in IoT DAG-DLTs. This aligns with Barabási-Albert (PA) topology principles, where larger networks sustain higher activity. Throughput was most sensitive to CTPS: every unit increase in confirmed transactions per second boosted throughput by ~766 transactions. This underscores the critical role of processing speed in IoT ledger performance. Higher MTL also correlated with increased throughput. This may reflect network buffering effects, where delays allow more transactions to accumulate before validation. However, this warrants further investigation to rule out confounding factors.

Table 7. Linear regression results predicting transaction throughput (trans) from network nodes, CTPS, and MTL

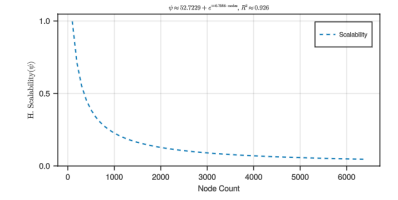

The scalability (ψ) measured over node growth using the CTPS/MTL ratio is shown in Figure 9, along with the curve fit and its respective R². For horizontal scalability (ψ), an exponential decay model (ψ ≈ 52.723 + e⁻⁰·⁷⁹⁶ × nodes) fits reasonably well (R² ≈ 0.926), implying that scalability benefits diminish rapidly as nodes increase.

Figure 9. Analysis of effects of nodes on CTPS and MTL with curve fit and R² value.

The scalability of the IoT-DAG model was estimated through linear regression analysis. This evaluated the relationship between transaction throughput, network nodes, confirmed transactions per second (CTPS), and mean transaction latency (MTL), providing insights into the factors influencing performance in large-scale IoT deployments. The regression results highlight the significant predictive power of these variables, offering a deeper understanding of how throughput is affected by network size, processing speed, and latency in the system, as indicated in Table 7 and Figure 9.

For IoT applications, these results suggest:

Topology matters: PA networks scale well with node growth.

Hardware/software improvements to transaction processing will yield the highest throughput gains.

Controlled delays might improve throughput but could compromise real-time performance.

5. CONCLUSION

The study confirms that IoT-DAG DLTs with preferential attachment (BA-2.3) and a 1:10 full/light node ratio can achieve predictable, near-linear scalability in throughput (CTPS) with modest latency growth. However, the model assumes ideal conditions; real-world deployments must account for physical constraints not captured here.

This research has demonstrated that, for the simulation models, CTPS scales linearly with node count, while MTL follows a logarithmic trend, indicating that adding more nodes yields diminishing gains in transaction load capacity. On horizontal scalability (ψ), the results exhibit exponential decay, which supports that initial node additions improve performance significantly, while the marginal benefit decreases sharply at higher node counts.

The high R² values and low RSE ranges suggest strong model reliability for CTPS and MTL, while the scalability model (ψ) provides useful but slightly less precise predictions. These findings can guide system design decisions, particularly in optimising node deployment for performance and scalability trade-offs.

REFERENCES

[1] A. Cullen, P. Ferraro, C. King, and R. Shorten, ‘On the Resilience of DAG-Based Distributed Ledgers in IoT Applications’, IEEE Internet Things J., vol. 7, no. 8, pp. 7112–7122, Aug. 2020, doi: 10.1109/JIOT.2020.2983401.

[2] H. Hellani, L. Sliman, A. E. Samhat, and E. Exposito, ‘Tangle the Blockchain: Towards Connecting Blockchain and DAG’, in 2021 IEEE 30th International Conference on Enabling Technologies: Infrastructure for Collaborative Enterprises (WETICE), Bayonne, France: IEEE, Oct. 2021, pp. 63–68. doi: 10.1109/WETICE53228.2021.00023.

[3] S. A. Bragadeesh, S. M. Narendran, and A. Umamakeswari, ‘Securing the Internet of Things Using Blockchain’, in Essential Enterprise Blockchain Concepts and Applications, 1st ed., K. Saini, P. Chelliah, and D. Saini, Eds., Auerbach Publications, 2021, pp. 103–122. doi: 10.1201/9781003097990-6.

[4] J. Rosenberger, F. Rauterberg, and D. Schramm, ‘Performance study on IOTA Chrysalis and Coordicide in the Industrial Internet of Things’, in 2021 IEEE Global Conference on Artificial Intelligence and Internet of Things (GCAIoT), Dubai, United Arab Emirates: IEEE, Dec. 2021, pp. 88–93. doi: 10.1109/GCAIoT53516.2021.9692985.

[5] A. Mohammed, H. A. Jamil, S. Mohd Nor, and M. Nadzir Marsono, ‘Malware Risk Analysis on the Campus Network with Bayesian Belief Network’, Int. J. Netw. Secur. Its Appl., vol. 5, no. 4, pp. 115–128, Jul. 2013, doi: 10.5121/ijnsa.2013.5409.

[6] R. Albert and A.-L. Barabási, ‘Statistical mechanics of complex networks’, Rev. Mod. Phys., vol. 74, no. 1, pp. 47–97, Jan. 2002, doi: 10.1103/RevModPhys.74.47.

[7] A. Cecilia Eberendu and T. Ifeanyi Chinebu, ‘Can Blockchain be a Solution to IoT Technical and Security Issues’, Int. J. Netw. Secur. Its Appl., vol. 13, no. 6, pp. 123–132, Nov. 2021, doi: 10.5121/ijnsa.2021.13609.

[8] S. Park, S. Oh, and H. Kim, ‘Performance Analysis of DAG-Based Cryptocurrency’, in 2019 IEEE International Conference on Communications Workshops (ICC Workshops), May 2019, pp. 1–6. doi: 10.1109/ICCW.2019.8756973.

[9] F. Gräbe, N. Kannengießer, S. Lins, and A. Sunyaev, ‘Do Not Be Fooled: Toward a Holistic Comparison of Distributed Ledger Technology Designs’, presented at the Hawaii International Conference on System Sciences, 2020. doi: 10.24251/HICSS.2020.770.

[10] A. Banerjee, A. G. Chandrasekhar, E. Duflo, and M. O. Jackson, ‘Using Gossips to Spread Information: Theory and Evidence from a Randomized Controlled Trial’, ArXiv14062293 Phys., May 2017, Accessed: Mar. 14, 2022. [Online]. Available: http://arxiv.org/abs/1406.2293

[11] A.-L. Barabási, R. Albert, and H. Jeong, ‘Scale-free characteristics of random networks: the topology of the world-wide web’, Phys. Stat. Mech. Its Appl., vol. 281, no. 1–4, pp. 69–77, Jun. 2000, doi: 10.1016/S0378-4371(00)00018-2.

[12] C. Fan, ‘Performance Analysis and Design of an IoT-Friendly DAG-based Distributed Ledger System’, Master’s thesis, University of Alberta, Department of Electrical and Computer Engineering, 2019. doi: 10.7939/r3-21yj-3545.

[13] K. Sveidqvist and contributors, Mermaid.js: Markdown-inspired diagramming tool. (2020). [Online]. Available: https://mermaid-js.github.io

[14] Q. A. Chaudhry, ‘An introduction to agent-based modeling: modeling natural, social, and engineered complex systems with NetLogo: a review’, Complex Adapt. Syst. Model., vol. 4, no. 1, p. 11, Dec. 2016, doi: 10.1186/s40294-016-0027-6.

[15] M. Bouchet-Valat and B. Kamiński, ‘DataFrames.jl : Flexible and Fast Tabular Data in Julia’, J. Stat. Softw., vol. 107, no. 4, 2023, doi: 10.18637/jss.v107.i04.

AUTHORS

Mr. Peter Kimemiah Mwangi is presently a postgraduate PhD. research student and an assistant lecturer in the Information Technology department at the Murang’a University of Technology, Main Campus, Murang’a County, Kenya. He has 12 years’ experience as an assistant lecturer and 22 years working in various senior positions in the IT Industry. He received his Master’s in Information Systems from the University of Phoenix, Arizona, United States of America and a BEd. Mathematics and Physics from Kenyatta University, Nairobi, Kenya. His research interests include ERPs databases, the Internet of Things, computing infrastructure and networks.

Dr. Stephen T. Njenga is presently working as a Computer Science faculty member at the Murang’a University of Technology, Main Campus, Murang’a County, Kenya. He has 11 years of experience as a faculty member. He received his PhD in Information Systems from The University of Nairobi, Kenya. He received a Master’s of Computer Science from The University of Nairobi, and a B.Sc. in Computer Science from Egerton University. His key research interests include artificial intelligence, agent-based modelling and distributed ledger technology.

Dr. Gabriel Ndung’u Kamau is a Senior Lecturer at Murang’a University of Technology, with a Bachelor of Education in Mathematics and Business from Kenyatta University, an MBA (MIS), and a PhD in Business Administration (Strategic Information Systems) from the University of Nairobi. He has over 10 years of teaching and administrative experience, including serving as Director of ODeL at his university. His research focuses on ICT4D, information systems philosophy, computer security, and disruptive technologies. Dr. Kamau has numerous publications, has supervised PhD and Master’s students to completion, and is a member of AIS and ACPK. He has also received professional training in ISO auditing, network security, DAAD NMT-DIES and digital education.